Software testing has become an integral part of the development process. It’s even more important nowadays that teams ensure the highest quality product is released, as software must work across multiple platforms and devices with different versions of operating systems. As a result, testing teams must be well-equipped with knowledge and technology solutions to keep up with this ever-evolving landscape. Unfortunately, these key challenges of software testing come with roadblocks that can leave QA leads feeling overwhelmed–until now!

In this blog post series, we’re taking a deep dive into some common issues seen in software testing and how best to overcome them. This is Part 2 of our series, so if you haven’t already read Part 1, check that out first before jumping in here. Now let’s get into it!

Lack of resources

A lack of resources in software testing can reduce the quality of the software being tested, delay the testing process, and increase the risk of undiscovered defects. In practice, it shows up as insufficient test coverage, incomplete testing, an inadequate testing environment, and limited test automation.

To overcome these issues, here are some strategies that software testing managers can use:

Lack of test prioritization

Test prioritization is the practice of testing the most important features or modules first. It is essential because not all test cases have the same impact on the software being tested. Prioritizing ensures that the most critical test cases are executed first, allowing for early identification of major defects. It also aids risk management and helps determine the level of testing required for a particular release or build. By prioritizing test cases, testers can optimize their efforts, reduce testing time, and improve software quality.

When test cases are not appropriately prioritized, it can lead to inadequate testing coverage and result in missed defects. This can have a significant impact on the quality of the software, as well as customer satisfaction levels. To overcome this issue, software testing managers should prioritize testing activities to focus resources on the most critical areas of the software. Additionally, they should implement techniques such as risk-based prioritization or test case optimization to ensure that tests are being executed in the correct order. Finally, teams should continuously review and refine their test plans to remain up-to-date with changing requirements.
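To make risk-based prioritization concrete, here is a minimal sketch in Python. It assumes each test case carries two hypothetical 1-to-5 estimates, `likelihood` and `impact`, and uses their product as a risk score. The class name, field names, and scoring formula are illustrative assumptions, not a prescribed standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskCase:
    """A test case with hypothetical risk estimates (names are assumptions)."""
    name: str
    likelihood: int  # assumed 1-5 estimate of how likely the area is to fail
    impact: int      # assumed 1-5 estimate of how costly a failure would be

    @property
    def risk_score(self) -> int:
        # One simple scoring choice: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritize(cases: list[RiskCase]) -> list[RiskCase]:
    """Return test cases ordered from highest to lowest risk score."""
    return sorted(cases, key=lambda c: c.risk_score, reverse=True)


cases = [
    RiskCase("update_profile_picture", likelihood=2, impact=1),
    RiskCase("checkout_payment", likelihood=3, impact=5),
    RiskCase("login", likelihood=2, impact=5),
]
ordered = [c.name for c in prioritize(cases)]
print(ordered)
```

With limited time, a team could run the list from the top and stop when the budget is exhausted, knowing the riskiest areas were covered first.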

Lack of proper test planning

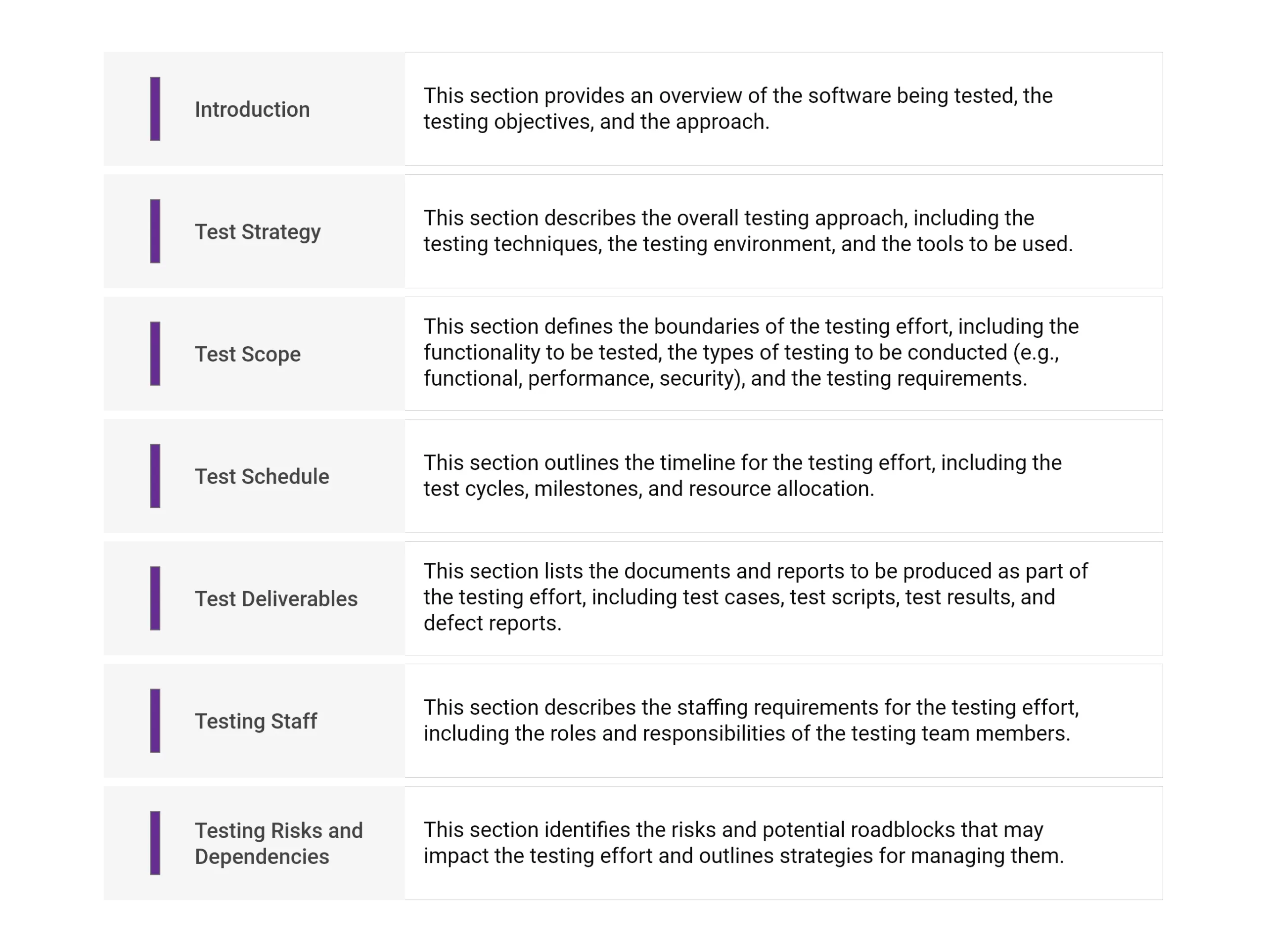

A test plan is a formal document that outlines the strategy, objectives, scope, and approach to be used in a software testing effort. It is typically created during the planning phase of the software development life cycle and serves as a guiding document for the entire testing team.

Without a proper test plan, issues fall through the cracks or are duplicated unnecessarily. The four most common problems are unclear roles and responsibilities, unclear test objectives, ill-defined test documents, and no strong feedback loop.

By creating a well-structured and comprehensive test plan, testing teams can ensure they understand the testing objectives and work efficiently to deliver a high-quality software product.

Here are the elements you need to build an effective test plan:

Test environment duplication

The test environment plays a vital role in the success of software testing, as it provides an isolated environment to run tests and identify potential defects. To ensure reliable results, the test environment should be designed to replicate the software’s target deployment and usage conditions, such as hardware architecture and operating system. This allows testers to uncover defects caused by environmental differences, such as different browser versions or user input formats.

To duplicate the test environment, teams should first thoroughly document the requirements for the test environment. They should also create an inventory of all relevant hardware and software components, including versions and configurations. Once this is done, they can leverage cloud-based technologies such as virtual machines or containers to spin up multiple copies of identical environments quickly and cost-effectively. Additionally, they should configure automated systems to monitor and track changes made to the test environment during testing.
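The inventory idea above can be sketched as a small comparison script. This is only an illustration: it assumes each environment's inventory is available as a dictionary of component names to version strings, and the function name `diff_inventories` and the sample components are hypothetical.

```python
def diff_inventories(reference: dict[str, str], candidate: dict[str, str]) -> dict[str, tuple]:
    """Return components whose versions differ, mapped to (reference, candidate).

    A component missing from one side is reported with None on that side.
    """
    drift = {}
    for component in sorted(reference.keys() | candidate.keys()):
        ref_version = reference.get(component)
        cand_version = candidate.get(component)
        if ref_version != cand_version:
            drift[component] = (ref_version, cand_version)
    return drift


# Hypothetical inventories for the target deployment and a test environment.
production = {"os": "ubuntu-22.04", "python": "3.11.4", "postgres": "15.3"}
test_env = {"os": "ubuntu-22.04", "python": "3.10.12", "postgres": "15.3", "redis": "7.0"}

drift = diff_inventories(production, test_env)
for component, (expected, actual) in drift.items():
    print(f"{component}: expected {expected}, found {actual}")
```

Running a check like this before each test cycle is one way to notice when a test environment has drifted away from the documented requirements.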

Test data management

Test data is a set of values used to run tests on software programs. It allows testers to validate the behavior and performance of the software against expected outcomes. These data sets can be generated manually or automatically, depending on the complexity of the software under test. Test data can include parameters such as user credentials, system configurations, transaction histories, etc., which can accurately represent real-world scenarios.

If test data is not managed correctly, it can lead to unreliable test results and an inaccurate software assessment. Poorly managed test data can also cause tests to take longer to execute due to inconsistencies in datasets or redundant information. Additionally, incorrect assumptions may be made during testing since the results are not accurately represented. Finally, if test data is not properly archived, it can be challenging to reproduce defects and rerun tests when necessary.
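One way to keep automatically generated test data reproducible is to derive it from a fixed seed, so a failing run can be recreated later with the same inputs. The sketch below assumes a hypothetical user record with `username`, `age`, and `balance` fields; the field names and value ranges are illustrative only.

```python
import random


def generate_users(count: int, seed: int) -> list[dict]:
    """Generate `count` synthetic user records deterministically from `seed`."""
    rng = random.Random(seed)  # local generator, so global random state is untouched
    users = []
    for i in range(count):
        users.append({
            "username": f"user_{i:03d}",
            "age": rng.randint(18, 90),
            "balance": round(rng.uniform(0, 10_000), 2),
        })
    return users


# The same seed yields the same data set, which makes a failing test repeatable.
first_run = generate_users(5, seed=42)
second_run = generate_users(5, seed=42)
print(first_run == second_run)
```

Recording the seed alongside the test results is a lightweight form of archiving: the data set itself does not need to be stored to be reproduced.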

Here are some key steps to consider when managing test data:

Undefined quality standards

Quality standards in software testing refer to a set of expectations for assessing the quality of the software under test. These standards are based on user requirements and industry best practices and typically include accuracy, reliability, performance, scalability, and security criteria.

If quality standards are undefined or unclear, it can confuse testers and developers and affect the overall testing quality. This can result in incorrect assumptions made during tests which may lead to undetected bugs and flaws in the final product.

To ensure good quality standards in testing, it is crucial to define objectives and expectations at the project’s outset. Meetings between stakeholders should also be held periodically throughout development to review progress against specific goals. Additionally, teams should have access to up-to-date test documentation so that they know exactly what is expected from them. Finally, regular code reviews should be conducted by experienced professionals who are knowledgeable about coding best practices.

Lack of traceability between requirements and test cases

Traceability in software testing is the process of keeping track of functional requirements and their associated tests. It is a vital part of quality assurance as it enables teams to confirm that all requirements are being tested properly and provides an audit trail in case any changes need to be made.

If there is no traceability in software testing, it can lead to problems such as undetected bugs and flaws in the final product. It also makes identifying gaps or errors in the process difficult due to the lack of an audit trail. Furthermore, without traceability, reviewing what has been tested against requirements and spotting redundant tests is hard.

Good traceability measures include maintaining detailed records of the different tests conducted, ensuring that all changes to requirements are tracked and recorded, and regularly reviewing tests against requirements. A Requirement Traceability Matrix (RTM) is a tool that maps test cases to their corresponding requirement to give teams an overview of what has been tested. This makes it easier to identify gaps or errors in the process. An RTM can also help identify redundant tests that may be inefficiently consuming resources.
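A minimal RTM can be sketched as a mapping from requirement IDs to the tests that cover them. The example below assumes each test declares the requirements it verifies; the IDs, test names, and helper functions are hypothetical. Note that several tests on one requirement are not necessarily redundant, so the last helper only flags candidates for review.

```python
from collections import defaultdict


def build_rtm(test_to_requirements: dict[str, list[str]]) -> dict[str, list[str]]:
    """Invert a test -> requirements mapping into requirement -> tests."""
    rtm = defaultdict(list)
    for test_name, requirement_ids in test_to_requirements.items():
        for requirement_id in requirement_ids:
            rtm[requirement_id].append(test_name)
    return dict(rtm)


def uncovered(requirements: list[str], rtm: dict[str, list[str]]) -> list[str]:
    """Requirements that no test maps to (coverage gaps)."""
    return [r for r in requirements if not rtm.get(r)]


def review_candidates(rtm: dict[str, list[str]]) -> dict[str, list[str]]:
    """Requirements covered by more than one test, worth checking for overlap."""
    return {r: tests for r, tests in rtm.items() if len(tests) > 1}


# Hypothetical requirements and tests.
requirements = ["REQ-1", "REQ-2", "REQ-3"]
tests = {
    "test_login_ok": ["REQ-1"],
    "test_login_bad_password": ["REQ-1"],
    "test_export_csv": ["REQ-2"],
}

rtm = build_rtm(tests)
gaps = uncovered(requirements, rtm)
overlaps = review_candidates(rtm)
print(gaps, overlaps)
```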

People issues

Conflicts between developers and testers can arise due to differences in their primary roles and objectives. Developers often focus on creating functional code, while testers focus more on product quality. These differing perspectives can lead to disagreements over issues such as when a test should be performed or how much time is allocated to testing.

To resolve these issues, both parties need open communication and a better understanding of each other’s goals. Additionally, setting clearer expectations of what needs to be achieved can help bring clarity and focus to the process. Finally, introducing metrics that measure overall effectiveness can help identify areas for improvement and act as an incentive for collaboration between developers and testers.

Release day of the week

Release management is essential to software development, as it aligns business needs with IT work. Automating day-to-day release manager activities can lead to a misconception that release management is no longer critical and can be replaced by product management.

When products and updates have to be released multiple times a day, manual testing becomes impossible to keep up with accurately. New features pose an even greater challenge with the needed levels of speed, accuracy, and additional testers. As the end of the working week approaches, there’s often a looming deadline between developers and the weekend – leaving little time for necessary tasks such as regression testing before deployment.

Deployment is nothing more than an additional step in the software lifecycle: you can deploy on any given day, provided you are willing to observe how your code behaves in production. If you’re just looking to deploy and get away from it all, then maybe hold off until another day, because tests alone will not give you a full understanding of how your code will perform in a real environment. In the absence of DevOps AI that automates observation and rolls back when necessary, teams must verify for themselves that their code works as intended, especially on Fridays.

Piecemeal deployments are key for releasing faster and improving code quality – though counterintuitive, doing something frightening repeatedly will help make it mundane and normalize it over time. Releasing frequently can help catch bugs sooner, making teams more efficient in the long run.

Lack of test coverage

Test coverage denotes how much of the software codebase is exercised by tests. It is typically expressed as a percentage: the amount of code executed by the test suite relative to the total amount of code. Test coverage can be used to evaluate the tests, spot untested areas, and check that all parts of the project have been sufficiently tested, reducing potential risks or bugs in production.
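As a rough illustration of the percentage, the sketch below computes line coverage from two sets of line numbers. It assumes a coverage tool has already reported which lines are executable and which were executed; the function name and sample numbers are hypothetical, and real tools also offer other measures such as branch coverage.

```python
def line_coverage(executed: set[int], executable: set[int]) -> float:
    """Return the percentage of executable lines that were executed by tests."""
    if not executable:
        raise ValueError("no executable lines to measure")
    # Only count executed lines that are actually executable.
    covered = executed & executable
    return 100.0 * len(covered) / len(executable)


# Hypothetical file with 8 executable lines, 6 of which were hit by tests.
executable_lines = {1, 2, 3, 4, 5, 6, 7, 8}
executed_lines = {1, 2, 3, 5, 6, 8}

coverage = line_coverage(executed_lines, executable_lines)
print(f"{coverage:.1f}%")
```

A high percentage shows that code was run, not that its behavior was checked, so the number is best read together with the quality of the assertions in the tests.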

Issues that can occur due to inadequate test coverage include software bugs, decreased reliability and stability of the software, increased risk of security vulnerabilities, and increased dependence on manual testing. Inadequate test coverage can also lead to inefficient development cycles, as unexpected errors may only be found later in the process. Finally, inadequate test coverage can lead to increased maintenance costs as more effort is needed to fix issues after release.

One way to address the problem of lack of test coverage is to ensure that all areas of the codebase are tested. This can be done by utilizing unit, integration, and system-level testing. It is also important to use automation for tests to ensure sufficient coverage. Additionally, it is essential to have rigorous code reviews to detect potential issues early on and set up software engineering guidelines that provide clear standards for coding practices and quality control.

Defect leakage

Defect leakage occurs when bugs and defects remain in a codebase even after tests have been conducted. This can lead to serious issues for software applications and must be addressed as soon as possible.
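Teams often track defect leakage as a percentage. One commonly used formula, assumed here, divides the defects found after release by all defects found (in testing plus after release). Other definitions exist, so treat this as a sketch with hypothetical numbers rather than a standard.

```python
def defect_leakage_percent(found_in_testing: int, found_after_release: int) -> float:
    """Share of all known defects that escaped testing, as a percentage.

    Assumed formula: leaked / (found in testing + leaked) * 100.
    """
    total = found_in_testing + found_after_release
    if total == 0:
        return 0.0  # no defects recorded at all
    return 100.0 * found_after_release / total


# Hypothetical release: 45 defects caught in testing, 5 reported afterwards.
leakage = defect_leakage_percent(found_in_testing=45, found_after_release=5)
print(f"{leakage:.1f}%")
```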

Defect leakage usually happens when there is insufficient test coverage, where not all areas of the codebase are properly tested. This means that some parts of the application go unchecked, so potential flaws or bugs are missed. Additionally, if the requirements analysis process was incomplete, certain scenarios or conditions may not have been considered during testing. Inadequate bug tracking processes can also result in undiscovered defects slipping through testing.

The best way to prevent defect leakage is by ensuring all areas of the codebase are thoroughly tested utilizing unit testing, integration testing, and system-level testing. Automation should also be utilized wherever possible to ensure adequate coverage across the entire application. Additionally, rigorous code reviews should be done so any potential issues can be detected early on and corrected before they become more severe problems down the line. Finally, organizations should set software engineering guidelines that help developers create high-quality code while ensuring defects don’t slip through testing unnoticed.

Defects marked as INVALID

Invalid bugs are software defects reported by testers or users but ultimately discarded because they don’t indicate a real issue. These bugs can lead to wasted resources and time as developers and testers work on them without making any progress.

Invalid bugs typically occur due to insufficient testing, where certain areas of the codebase have not been thoroughly tested or covered. This can lead to false positives where software appears to malfunction even though it works properly. Additionally, if the requirements analysis process was incomplete, it’s possible that certain scenarios or conditions weren’t considered during testing, which could also cause invalid bugs to be raised.

The best way to avoid invalid bugs is to ensure quality assurance processes are up-to-date and robust. Testers should perform thorough tests across all areas of the application before releasing a new version – unit testing, integration testing, system-level testing, etc. Automation should also be utilized wherever possible to ensure adequate coverage across the entire application and reduce room for human error in manual testing. Reports from users must be taken seriously – however, each report should be investigated carefully before concluding whether an issue needs further attention.

Running out of test ideas

If a software tester runs out of ideas, it can be a huge problem – they won’t be able to find any more defects. Thus, the quality assurance process will suffer. To overcome this issue, testers should use different methods and approaches to ensure that all application areas are thoroughly tested.

First off, it’s essential to have a clear understanding of the requirements and make sure that these have been considered when testing. It may be helpful for testers to use tools such as Mind Mapping or Fishbone diagrams to organize their thoughts before beginning testing. Additionally, testers should consider using automated testing tools or scripts to cover more ground effectively – repeating certain tests across multiple environments can help find potential bugs faster.

Another valuable approach for finding bugs is exploratory testing, where testers explore the application by trying out different scenarios and use cases that weren’t included in the initial test plans. This approach encourages creativity from the tester and can uncover unexpected issues which were previously unknown. Additionally, bug bounty programs can be set up, which allow external users to report any bugs they find on an application – this way, new ideas can be generated without requiring more input from internal testers.

Struggling to reproduce an inconsistent bug

Inconsistent bugs are software defects due to an inconsistency between different versions or areas of the codebase. These bugs can be difficult to debug because it’s not always immediately obvious why one part of the application behaves differently from another.

Inconsistent bugs usually occur when changes are made to a product, but not every area is updated accordingly. This can lead to some areas being more up-to-date than others, creating discrepancies in behavior between them. Additionally, if developers have used different coding practices for different parts of the codebase, inconsistencies can also arise – such as using a different function in one area and an alternative function in another despite both ultimately performing the same task.

The best way to fix inconsistent bugs is to ensure that all relevant code is up-to-date and consistent across each version and environment. Automation tools should be used wherever possible to distribute updates quickly and efficiently while keeping track of changes. Additionally, developers need to use similar coding practices throughout each area, including consistent syntax and structure, so that discrepancies don’t arise unintentionally. Finally, testers must keep a close eye on newly released versions by performing thorough tests across multiple environments; this will help identify any issues quickly before they become widespread.

Blame game

The blame game is a common problem in software testing projects that can lead to communication breakdowns between different teams and avoidable mistakes. In order to avoid this issue, it’s crucial for everyone involved to take responsibility for the tasks assigned to them and understand the importance of their role.

An excellent way to prevent blame game scenarios is by having transparent processes in place right from the start. Agree on who is responsible for what tasks, and ensure that progress updates are communicated frequently among all stakeholders. Additionally, holding regular reviews or retrospectives throughout the testing process can help identify issues early before they become serious problems – allowing any necessary changes to be made quickly and effectively.

Setting up an environment of trust is also key to avoiding blame game situations. Team members should feel comfortable discussing challenges without fear of criticism or judgment, resulting in higher-quality output, as issues can be discussed openly without worrying about assigning blame afterward. Additionally, testers should remain focused on understanding the reason behind any errors rather than just pointing out mistakes – this will empower them to suggest solutions instead of simply criticizing others’ work.

Conclusion

Software testing is a complex and challenging process, but with the right systems and processes in place, it’s possible to reduce issues and problems to a minimum. Building trust between different teams, setting up clear guidelines for all stakeholders, and utilizing automated testing tools are key components to ensuring that the software you produce meets the highest quality standard. With these approaches in place, teams can develop and launch robust applications without too much difficulty.