Data modernization and artificial intelligence are taking over the business world. These days, you can’t turn around without hearing phrases like “machine learning” or “digital modernization.”

Nearly every business owner has at least some interest in digitizing their business. After all, it’s nearly impossible to remain relevant without modernizing legacy technology platforms. And because modernization itself is the main driver of data challenges, almost every company runs into them sooner or later.

While many large corporations and modern start-ups have gotten a handle on modernizing their digital processes and embracing cloud computing, smaller, established companies are struggling to make the change. For the most part, these struggles come down to time and capacity.

Data Management in the Modern World

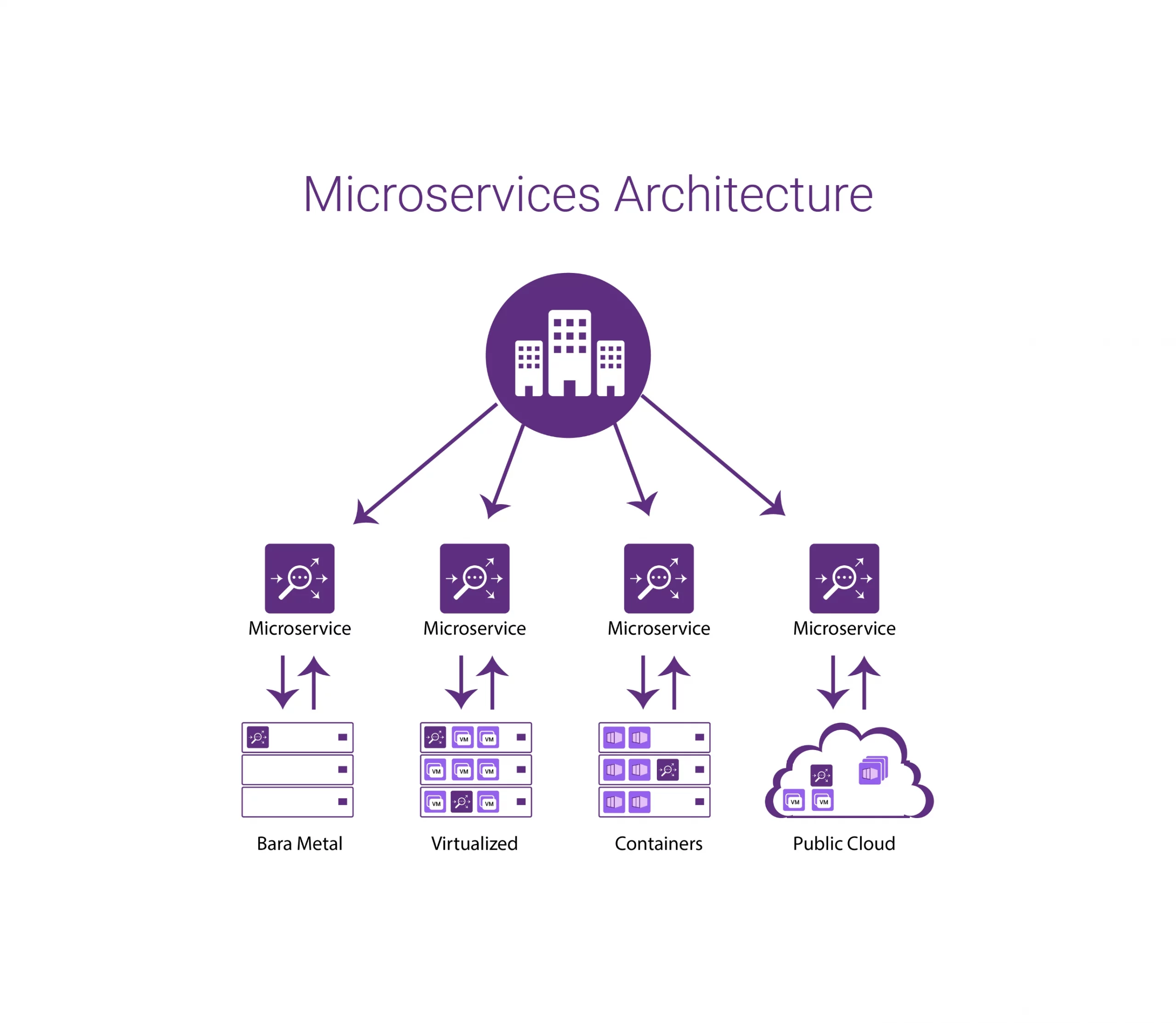

As data management continues to evolve, enterprises of all shapes and sizes are running into problems with data quality and with integrating cloud-based technology platforms. Many businesses are in the middle of modernizing their data, and across the board, the way companies store data is shifting from an on-premises-centered approach to a hybrid architecture.

Shaping Modern Data Architecture

As companies around the globe take on legacy technology modernization, tech industry leaders have identified several key factors that shape how businesses manage their data. Although the companies themselves may be radically different, these data management elements remain the same.

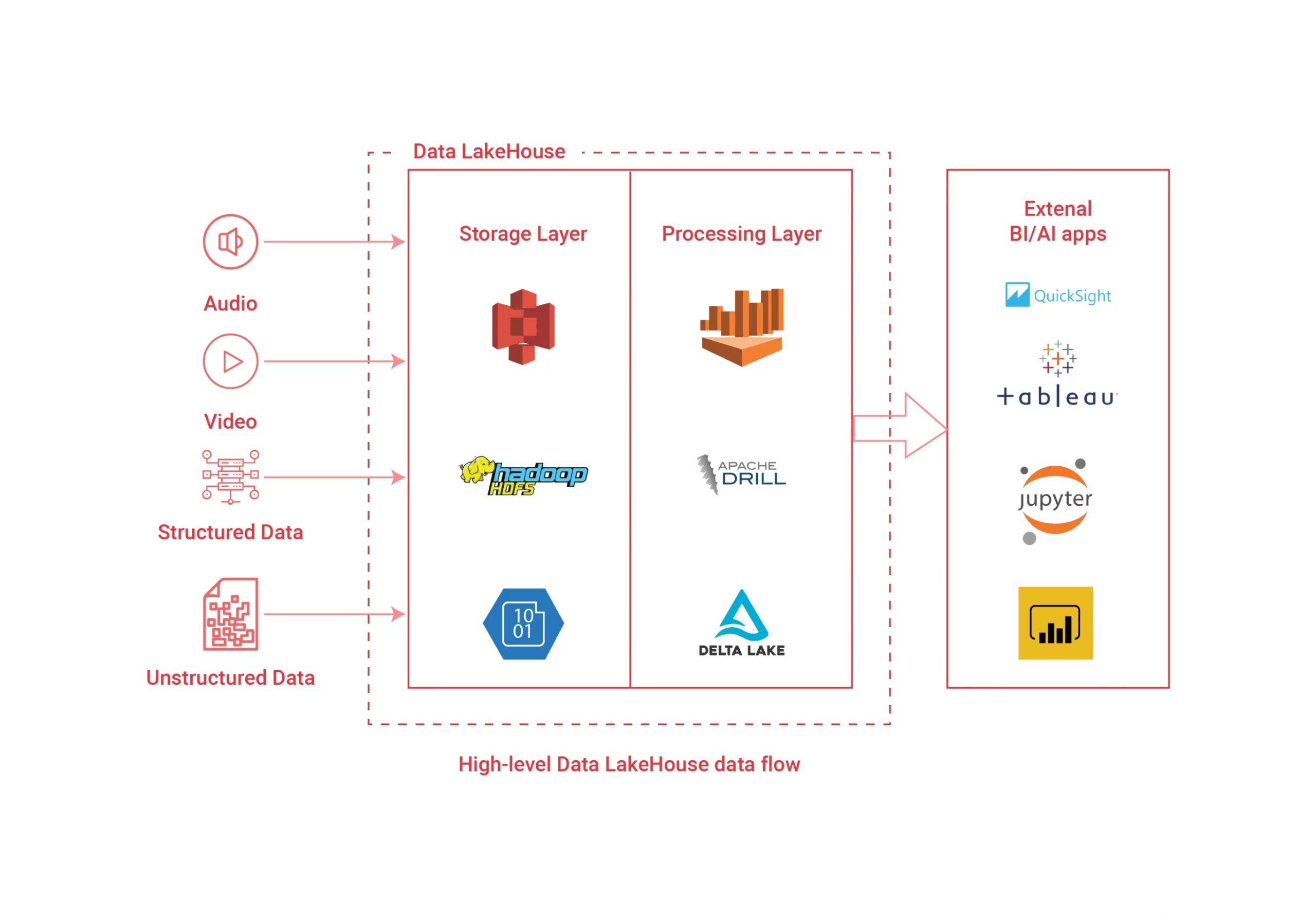

Open-Source Frameworks

Open-source frameworks, typically built and maintained by a community of software developers, are extremely common among businesses changing how they manage their data. They are generally free to use, which gives companies of any size access to the big data infrastructure needed to carry out modernization.

Cloud Computing

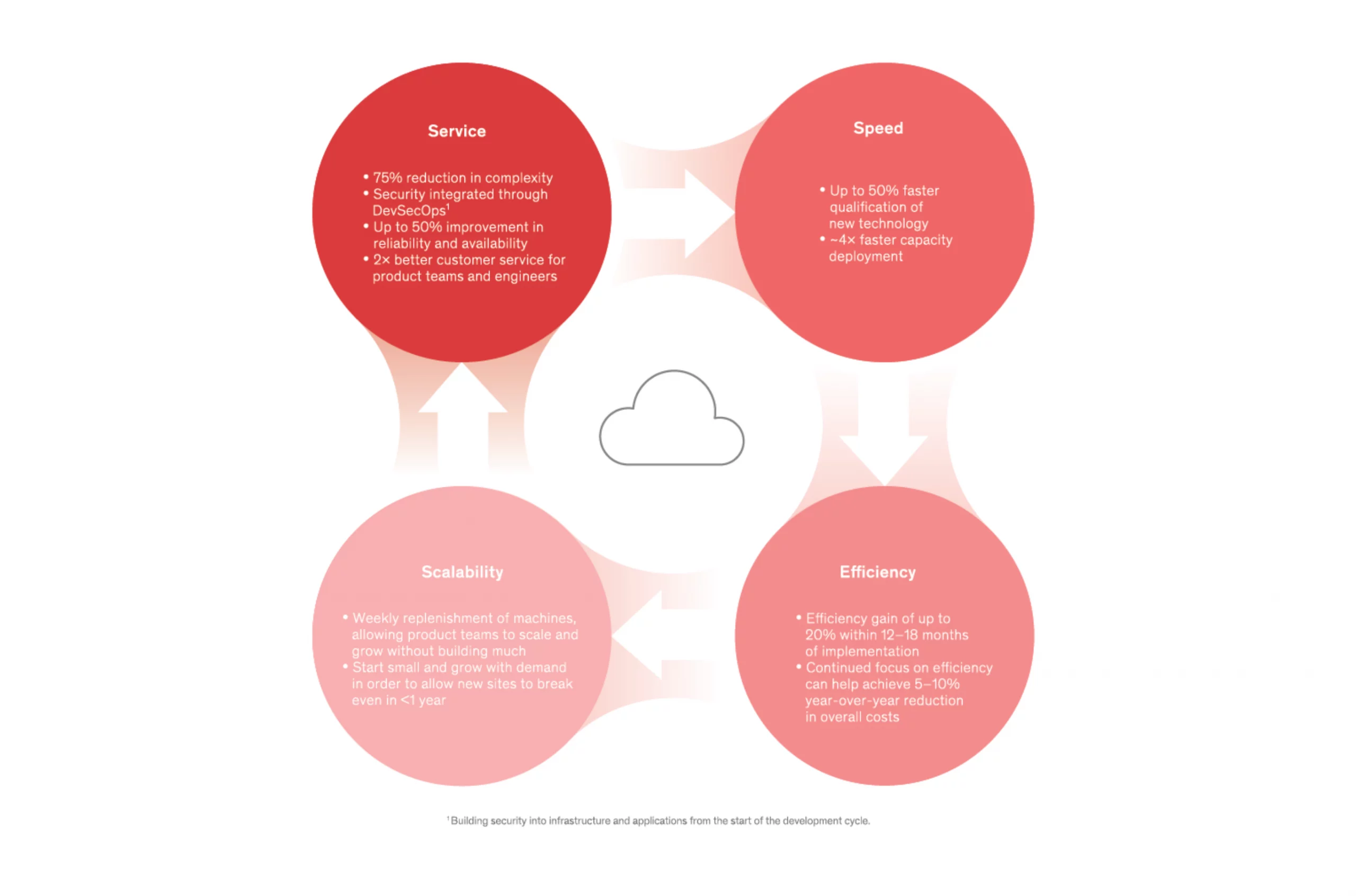

Overall, cloud computing is relatively user-friendly, and cloud storage is simple to adopt. Many providers offer storage and other cloud services at relatively low prices. The wide availability of cloud-hosting companies is encouraging businesses to integrate their legacy systems with the cloud or move them there outright. Without question, cloud migration is one of the leading drivers of data modernization.

Analytics Tools

The evolution of analytics tools is also fueling many companies’ desire to modernize their data. Analytics and end-user reporting are better (and more sophisticated) than ever before.

Many modernizing companies that focus heavily on analytics have added a Citizen Analyst role. A Citizen Analyst is a person, often working in a business role rather than a dedicated data science position, who is knowledgeable about analytics and machine learning (ML) systems and algorithms. If you choose to have one on staff, your Citizen Analyst can support the modernization process by identifying profitable business opportunities.

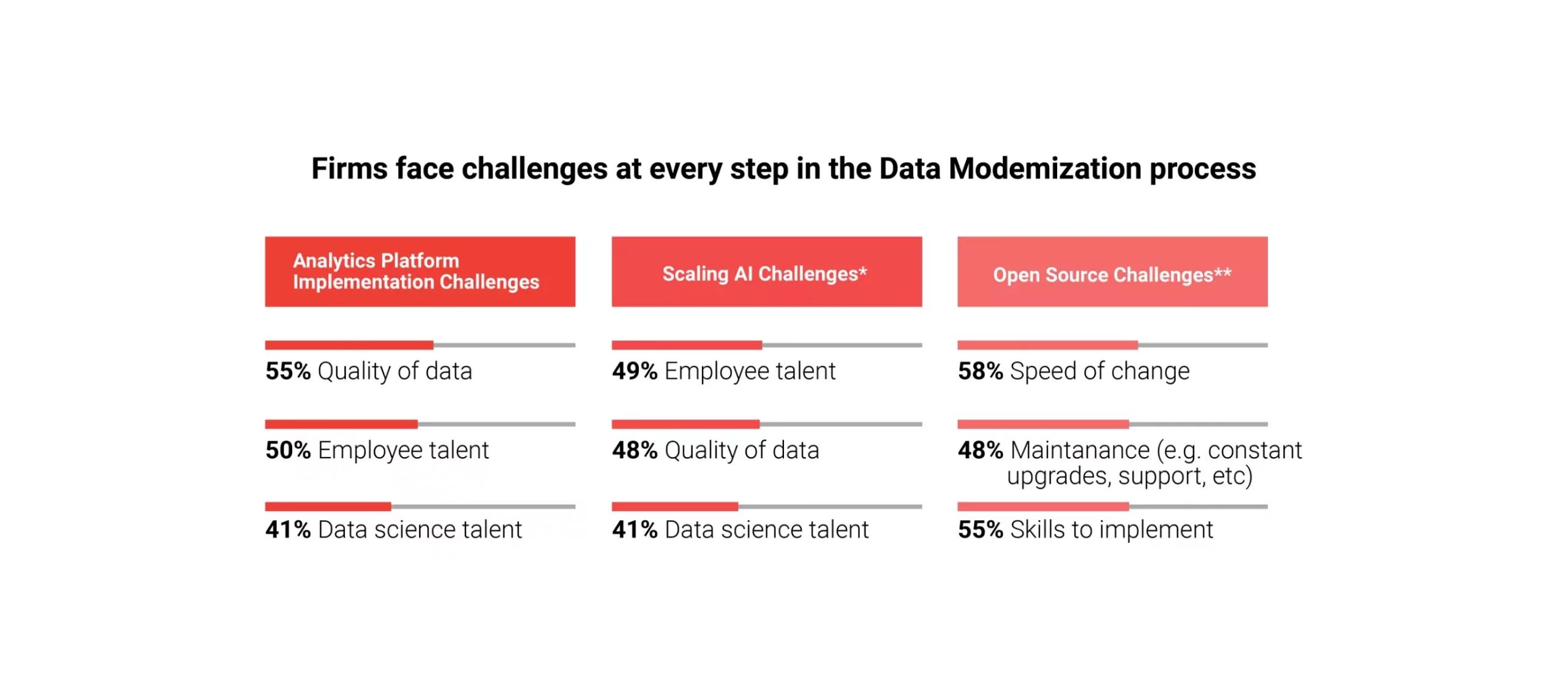

Data Challenges and Modernization Barriers

As the world races toward an even newer, more modern digital era, it’s clear that some companies are being left behind. It was once possible for a business to forgo an internet presence, but those days are long gone. To stay relevant and keep pace with, or pull ahead of, your competitors, you have to focus on modernization and your customer journey.

Data challenges and modernization barriers are common, but they don’t have to keep a business from succeeding digitally. Ignoring modernization altogether, however, makes it almost impossible to stay profitable.

Data Quality

We touched on this briefly earlier, but data quality is a major obstacle to the mechanics of modernization. Data issues such as inconsistency and incompleteness complicate a company’s migration to the cloud, and most of them stem from an inability to maintain high-quality data both during and after the transition.
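Incompleteness and inconsistency can both be checked mechanically during a migration. As a minimal sketch, assuming records are plain Python dicts and using a hypothetical schema (`customer_id`, `email`, `country`) with `customer_id` as the key, the code below flags migrated records that are missing required values and source records that went missing or changed on their way to the target system:

```python
# Minimal data-quality checks for a migration, assuming records are plain
# Python dicts. The schema and key below are hypothetical, for illustration.
from typing import Any

Record = dict[str, Any]

REQUIRED_FIELDS = ("customer_id", "email", "country")  # hypothetical schema
KEY_FIELD = "customer_id"                                # hypothetical key


def is_incomplete(record: Record, required=REQUIRED_FIELDS) -> bool:
    """A record is incomplete if any required field is missing, None, or empty."""
    return any(record.get(field) in (None, "") for field in required)


def quality_report(source: list[Record], target: list[Record]) -> dict[str, Any]:
    """Compare pre-migration (source) and post-migration (target) records."""
    target_by_key = {r.get(KEY_FIELD): r for r in target}

    # Completeness: migrated records that are missing required values.
    incomplete = [r.get(KEY_FIELD) for r in target if is_incomplete(r)]

    # Consistency: source records absent from the target, or whose
    # required fields changed during the move.
    mismatched = []
    for r in source:
        migrated = target_by_key.get(r.get(KEY_FIELD))
        if migrated is None or any(
            r.get(f) != migrated.get(f) for f in REQUIRED_FIELDS
        ):
            mismatched.append(r.get(KEY_FIELD))

    return {
        "source_rows": len(source),
        "target_rows": len(target),
        "incomplete_in_target": incomplete,
        "mismatched_keys": mismatched,
    }


source = [
    {"customer_id": 1, "email": "a@example.com", "country": "US"},
    {"customer_id": 2, "email": "b@example.com", "country": "DE"},
    {"customer_id": 3, "email": "c@example.com", "country": "FR"},
]
target = [
    {"customer_id": 1, "email": "a@example.com", "country": "US"},
    {"customer_id": 2, "email": "", "country": "DE"},  # email lost in transit
]

print(quality_report(source, target))
# {'source_rows': 3, 'target_rows': 2, 'incomplete_in_target': [2], 'mismatched_keys': [2, 3]}
```

Running a check like this both before and after cutover turns “we think the data moved cleanly” into something you can measure.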

Data Sprawl

Integrating cloud data with on-premises data can be incredibly challenging. Data sprawl is the sheer volume and variety of digital information a business creates, collects, shares, stores, and analyzes. Depending on the size of the enterprise, this data can be overwhelming to move, especially if parts of it turn up incomplete.

The Role of “Big Data”

Modernization through a data strategy is a fantastic concept if properly embraced. Yet thousands of companies still aren’t using a “big data” platform, one built for data that arrives in greater variety, in increasing volumes, and with more velocity.

This slow adoption has nothing to do with how effective a “big data” platform is. Instead, it suggests that companies struggle to define the role “big data” should play alongside their existing data. They know they have to modernize but don’t know where to start, and that sense of overwhelm is one of the biggest data modernization challenges of all.

Compliance Concerns

Compliance is another common source of data challenges. As companies continually modernize and move data, much of it sensitive, a growing number of regulations and data protection mandates come into play.

Obviously, we need rules and regulations in place to protect sensitive data for businesses and consumers. However, many companies worry that they won’t be able to keep up with ever-changing compliance requirements and could face fines as a result.

The need for regulated data protection isn’t going away anytime soon, so companies that want to pursue digital modernization and grow their business must find a way to comply. Whatever your feelings on the topic, compliance is undeniably a challenge for data modernization.

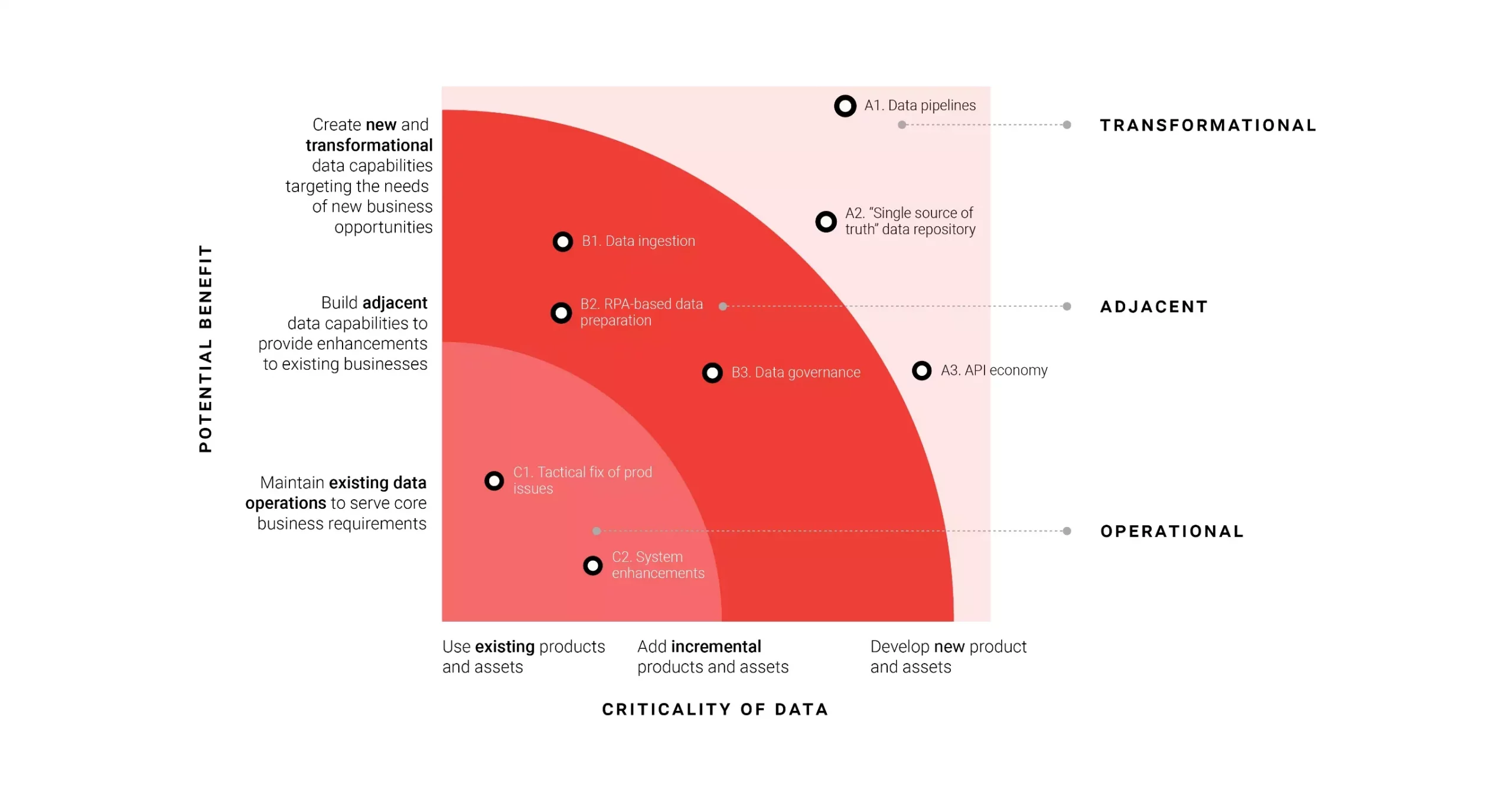

Successfully Modernizing Data

Harnessing the power of your current (and ever-growing) data is essential to achieving growth and excellence in your business operations. Successfully modernizing legacy systems means complying with mandates, enabling valuable analytics for your company, and providing a fantastic customer experience.

Modernization barriers tend to look similar from one business to the next, and they don’t mean you can’t succeed at launching a digital transformation. You will, however, have to clear a few roadblocks (besides data quality) along the way.

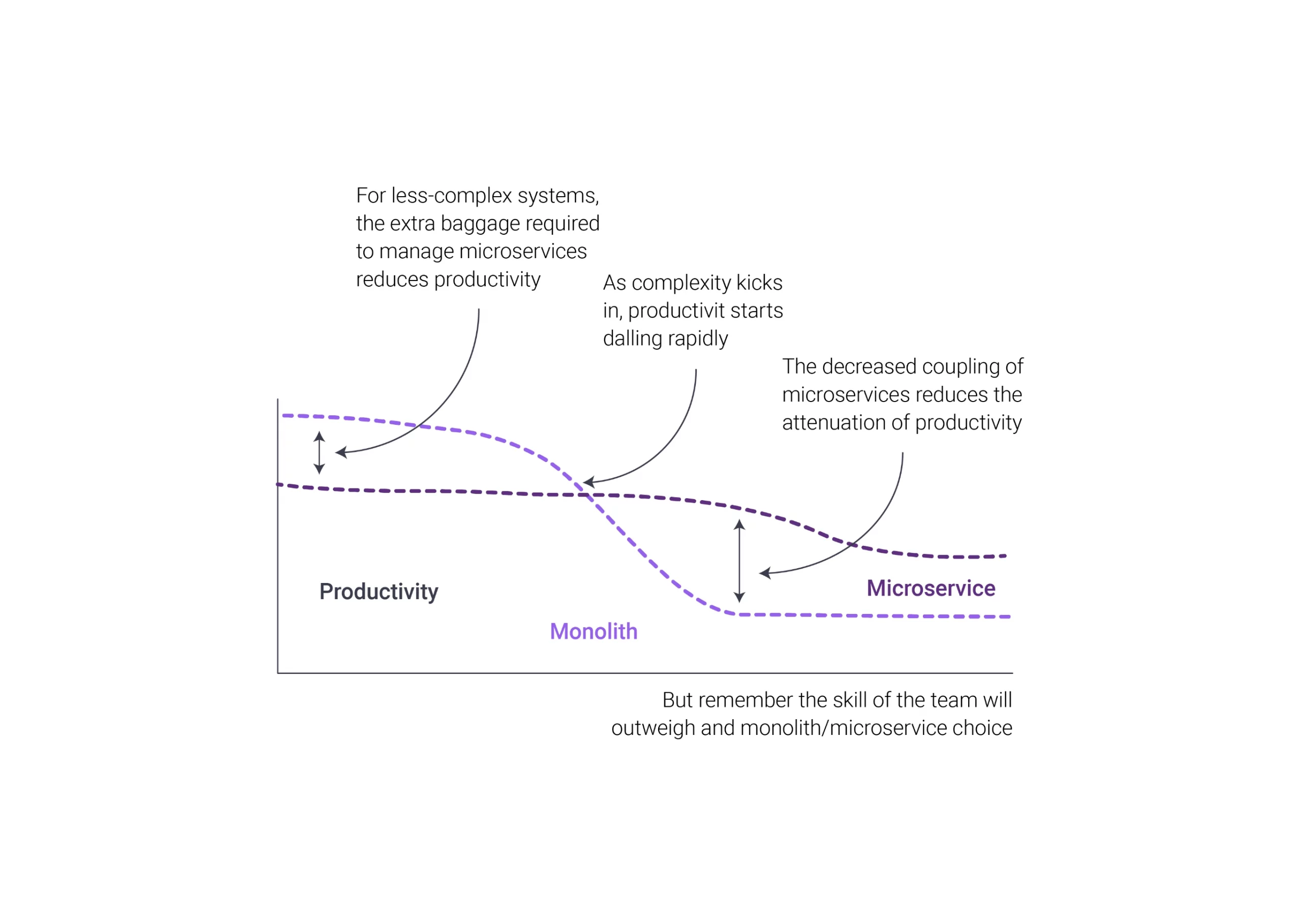

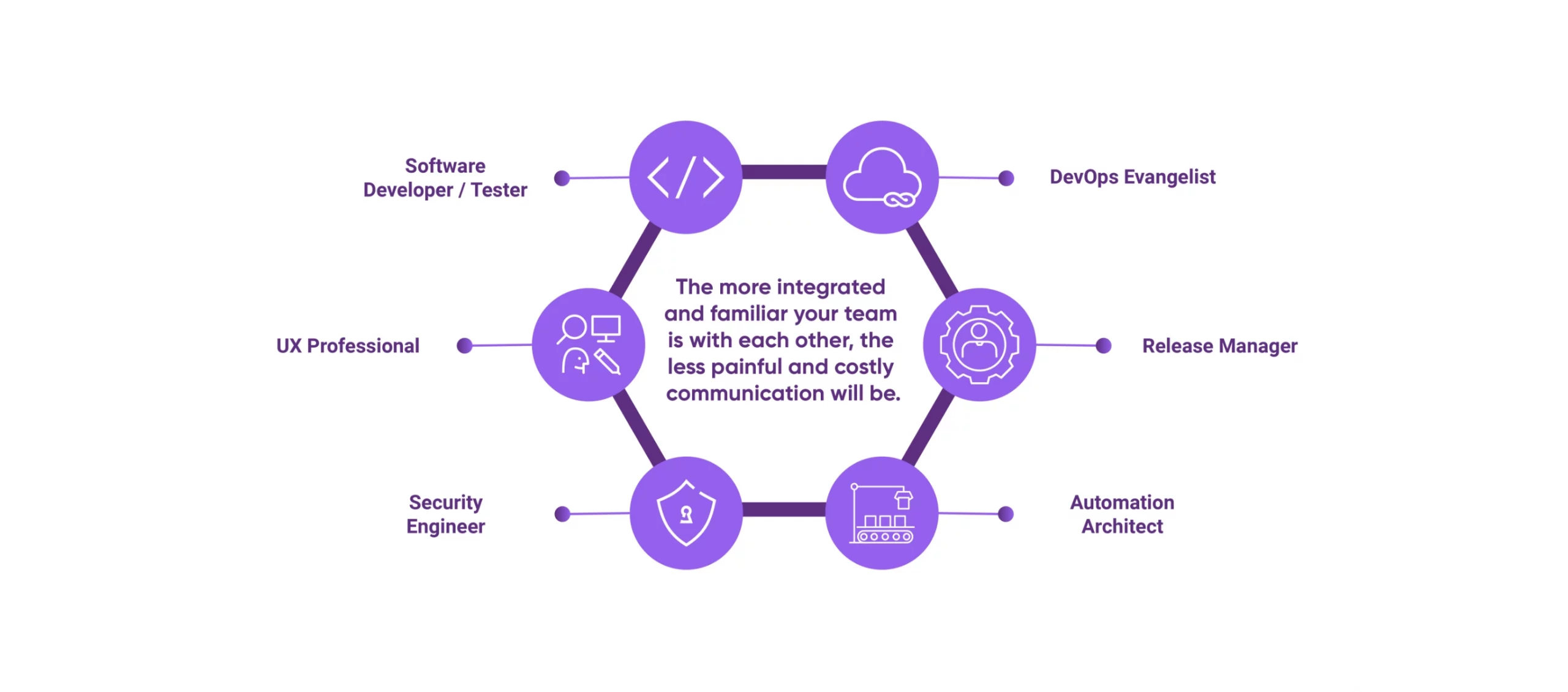

Misaligned Employee Skills

More often than not, your employees’ current skill sets don’t align with your data management needs. Everyone struggles (to some degree) to find talent. When it comes to data modernization, how much your employees know can directly affect data management and the rollout of new solutions.

For example, if you adopt an advanced analytics platform that your employees don’t have the skills to use, you’ve hit a wall. Data science professionals are essential to data modernization, so this skills gap is a real problem for many companies.

Open-Source Hurdles

Even though open-source tools open up data modernization to almost everyone, many businesses are too wrapped up in security concerns to consider them. Open-source platforms also change quickly, and that pace of change can affect the entire organization if teams aren’t on the same page.

Digital modernization requires company-wide support and effort. Maintaining and implementing open-source applications is also challenging, which is why skilled staff are crucial to pulling off a successful data modernization.

Early Stages of Basic Solutions

For many businesses, even the most basic data storage solutions are still in the (very) early stages of development, which is a real problem. Data lakes and data governance tools are foundational for data-driven companies, but because so many businesses haven’t yet put basic data storage in place, they’re bound to struggle when it comes time to adopt a more modern approach. They’re simply not ready.

Dissatisfied with Implementation

If there’s one thing many companies have learned while trying to modernize their data, it’s that purchasing or downloading a framework to upgrade how you store your digital information doesn’t automatically give you a complete solution.

Many organizations remain unhappy with how their data tools, analytics platforms, and data lakes are governed or implemented. That’s not to say the dissatisfaction comes from the tools themselves; rather, the way they have been implemented falls short of the business’s needs.

Our Recommendations

With so many companies stuck in the middle of a messy digital modernization, we understand that the bottom line for businesses is access to systems that show results immediately. However, technology can’t solve your problems on its own.

To get the best out of data modernization, we suggest:

- Test emerging technologies, which evolve at a rapid pace. The technology you implement today could become obsolete within the next five years, so select a provider that keeps up with these changes.

- Put the cloud at the center of your modernization strategy, since it’s designed to handle both operational workloads and analytics with high levels of security.

- Choose to work with a provider that focuses on the priorities and initiatives of your business. Tangible results come from providers that understand outcomes.

Overcoming Data Modernization Challenges

It’s frustrating to be stuck between running old legacy systems and trying to modernize your data, with neither side working in your favor. Your best bet is to partner with a service provider that focuses on results while building hybrid strategies.

Organizing and digitizing your data so that it works for your business, contributing to growth instead of simply sitting there for reference, brings too many benefits to pass up. Data modernization can’t be ignored, so make sure you’re taking the right path.
