Introduction

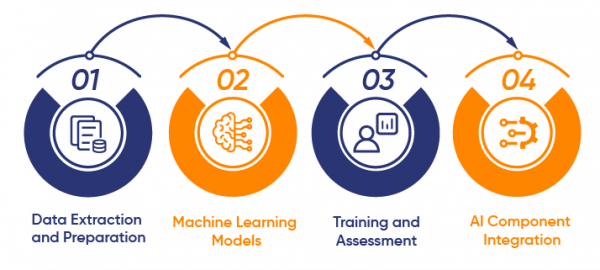

The key components of building Intelligent Applications

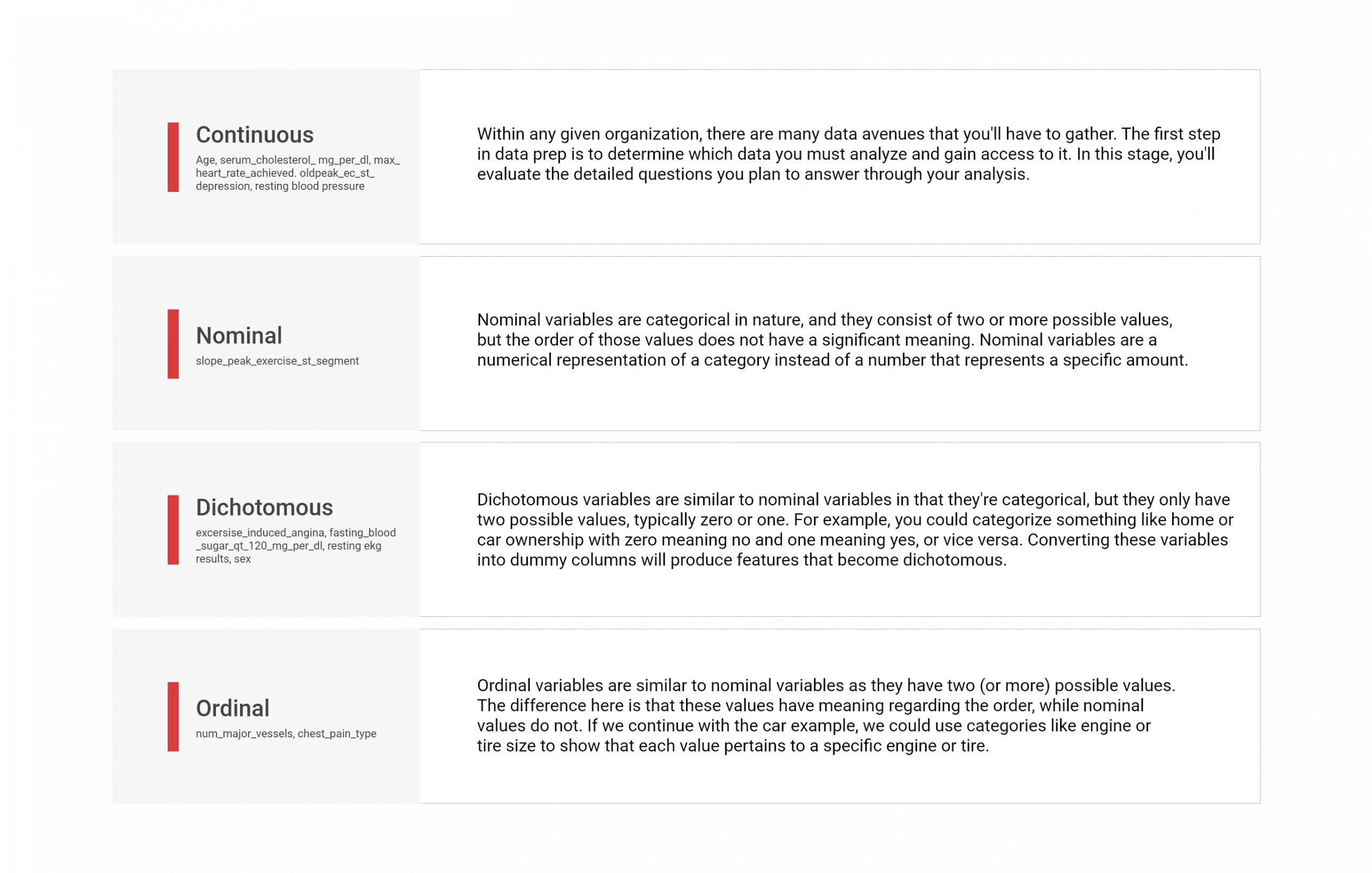

1. Data Extraction and Preparation

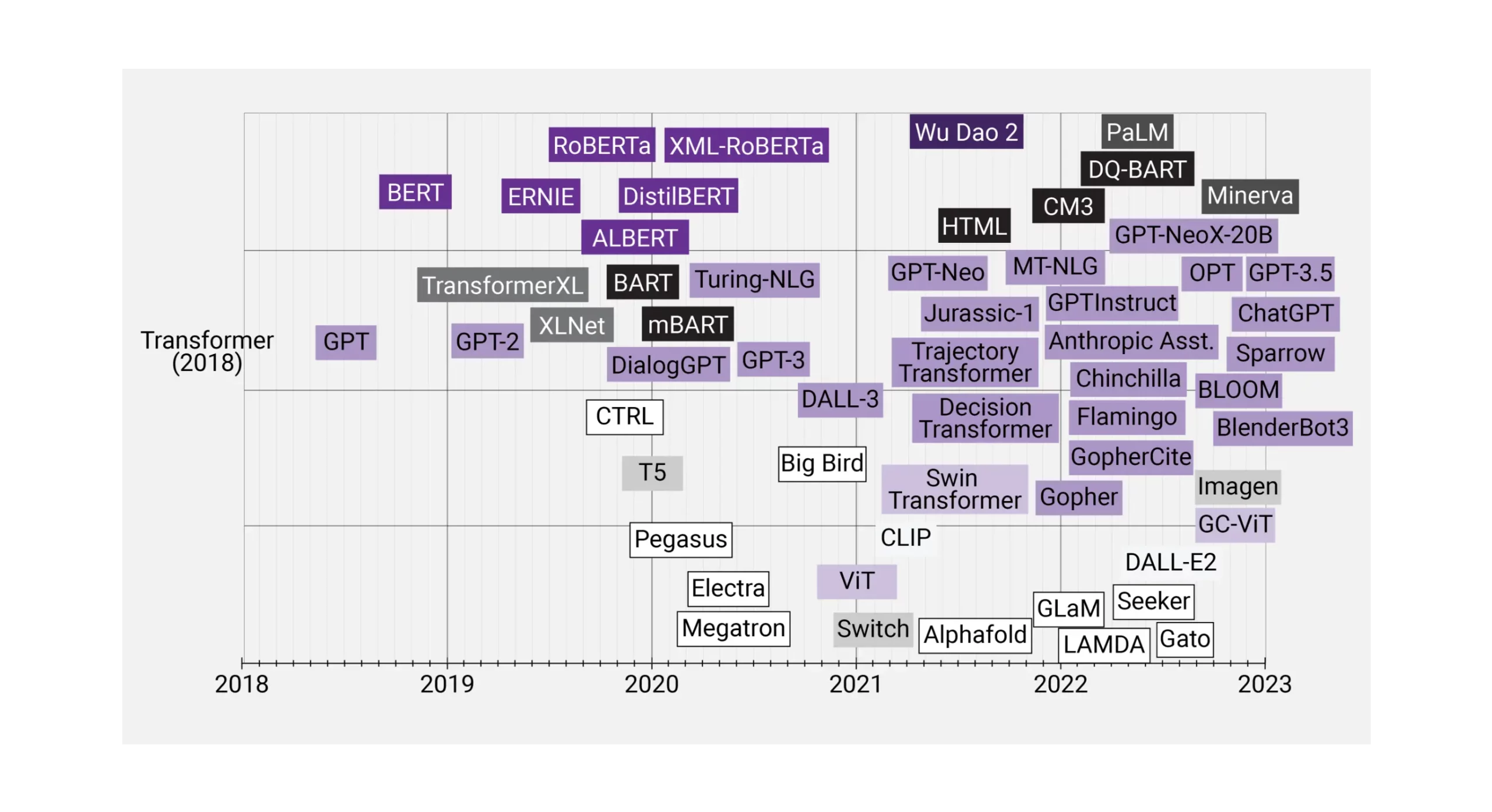

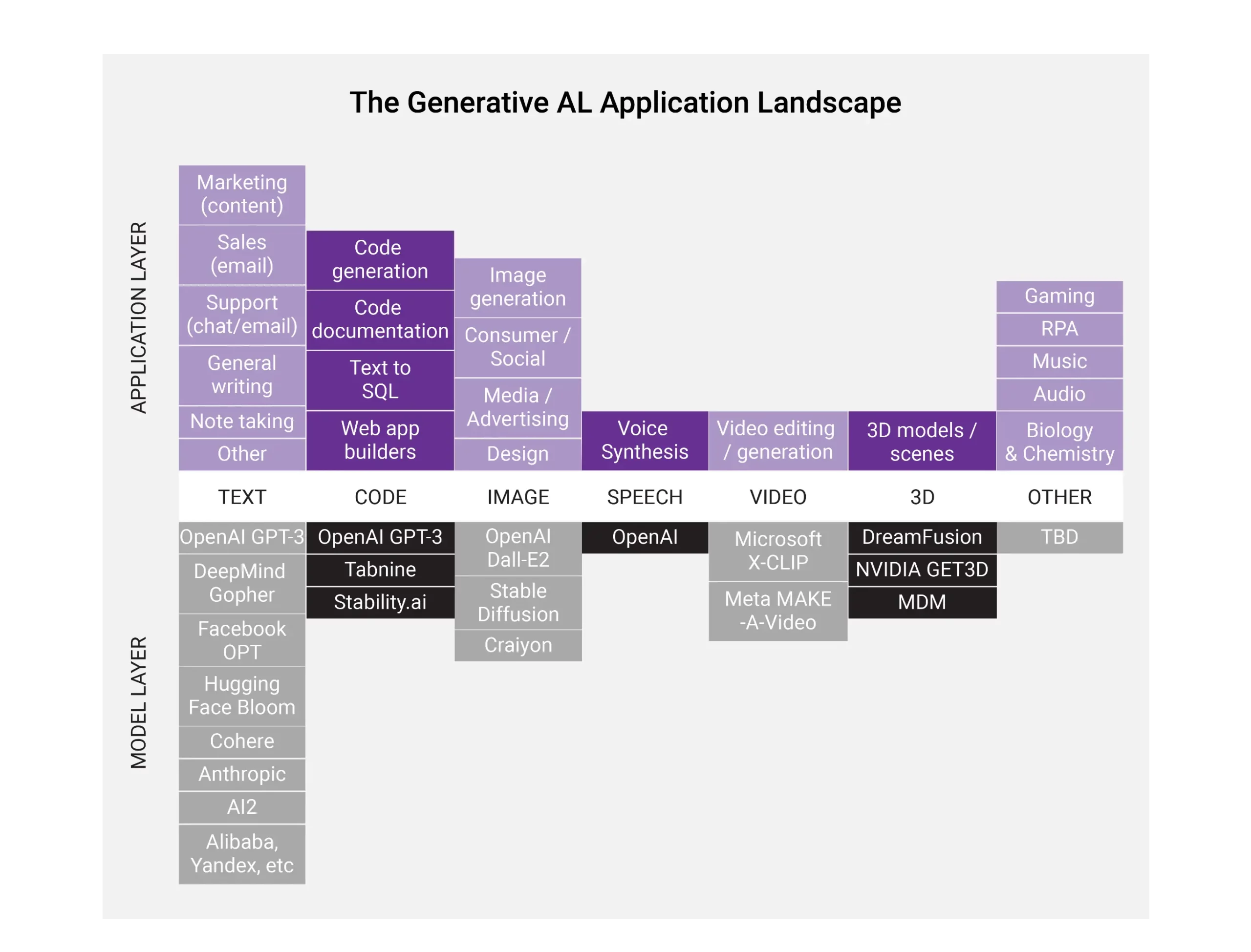

2. Machine Learning Models

3. Training and Assessment

4. AI Component Integration

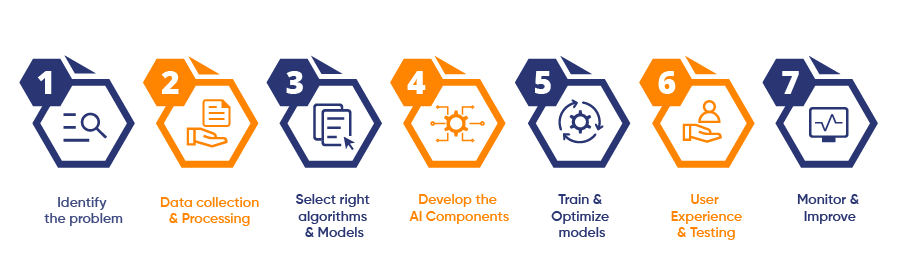

Building Intelligent Applications — Step by Step

1. Identify the problem

- Identify the Problem

- Understand the Business Operational Purposes

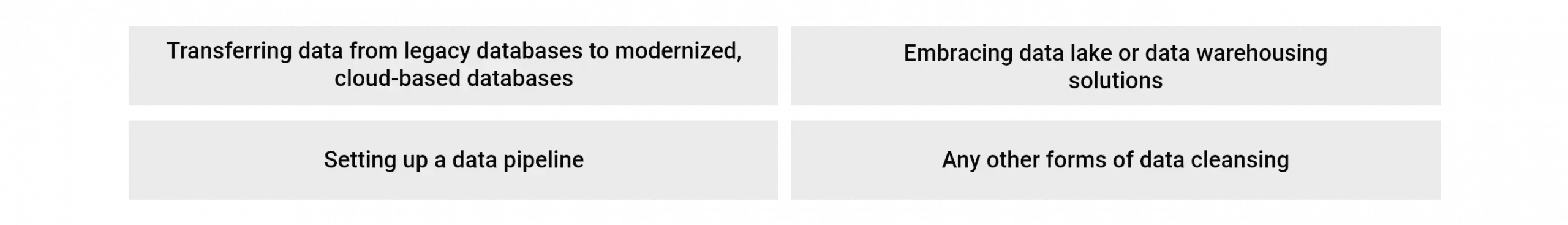

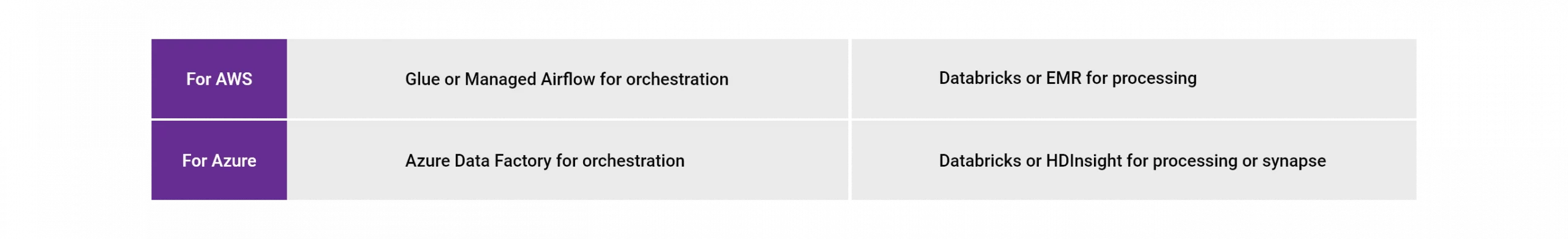

2. Data Collection and Processing

- Real-time Data Gathering

- Data Pool Support

3. Select the Right Algorithms and Models

- Machine Learning Tools

- Deep Learning and Neural Networks

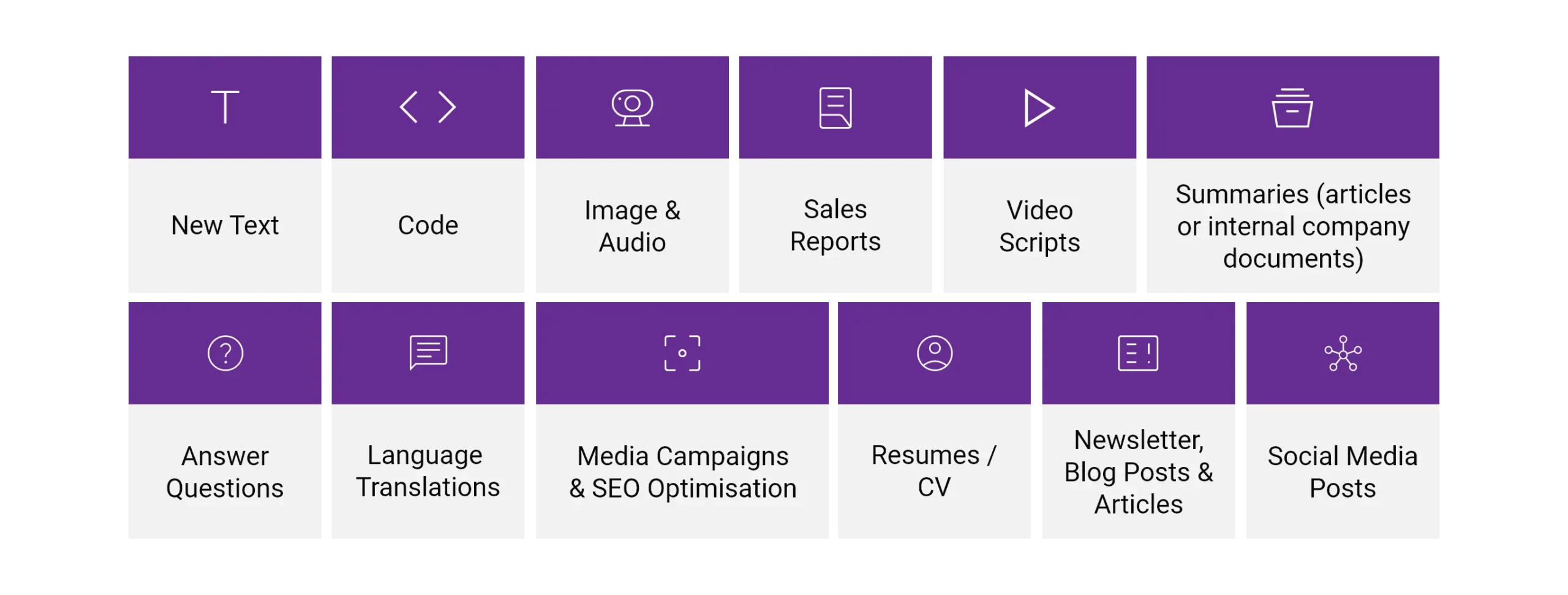

4. Develop the AI Components

- Cognitive APIs

- Low-Code Platforms

5. Train and Optimize Models

- Usability Testing

- AI-Driven Design Systems

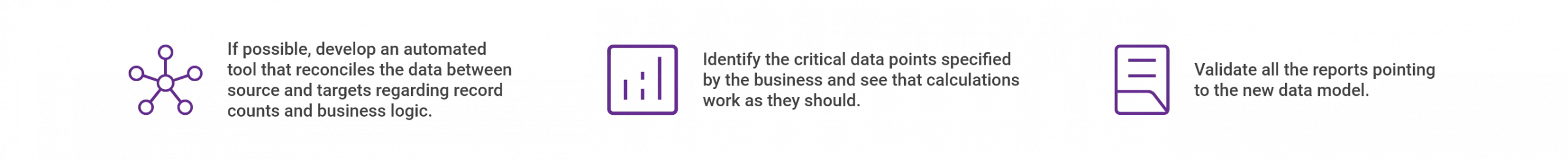

6. User Experience and Testing

- User-Centric Approach

- Make Use of the Python Dictionary

7. Monitor and Improve

- AI and Analytical Technologies

- Best Practices for AI-powered Mobile App Development

Examples of AI-enhanced Applications

1. Sales Forecasting

Sales forecasting is one area that AI can enhance. AI models can process large volumes of historical sales and customer data to predict demand and customer behavior. Sales teams can use these predictions to tailor their strategies and improve their ROI. Businesses that integrate AI-based forecasting tools into their sales applications can gain an edge over their competition.
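To make the idea of forecasting from historical data concrete, here is a minimal sketch that fits a straight-line trend to past sales with ordinary least squares and extrapolates one period ahead. The function names and the sales figures are hypothetical, and a linear trend is only an illustrative stand-in for whatever model a real forecasting tool would use.

```python
from statistics import mean


def fit_linear_trend(sales):
    """Fit y = intercept + slope * t by least squares, with t = 0, 1, 2, ..."""
    if len(sales) < 2:
        raise ValueError("need at least two observations to fit a trend")
    periods = range(len(sales))
    t_mean = mean(periods)
    y_mean = mean(sales)
    slope = (
        sum((t - t_mean) * (y - y_mean) for t, y in zip(periods, sales))
        / sum((t - t_mean) ** 2 for t in periods)
    )
    intercept = y_mean - slope * t_mean
    return intercept, slope


def forecast(sales, steps_ahead=1):
    """Extrapolate the fitted trend steps_ahead periods past the last observation."""
    intercept, slope = fit_linear_trend(sales)
    return intercept + slope * (len(sales) - 1 + steps_ahead)


# Hypothetical monthly sales figures, used for illustration only.
monthly_sales = [100, 110, 120, 130]
next_month = forecast(monthly_sales)
print(f"Forecast for next month: {next_month:.1f}")
```

A real application would likely add seasonality, more input features, and a held-out evaluation period before trusting the numbers.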

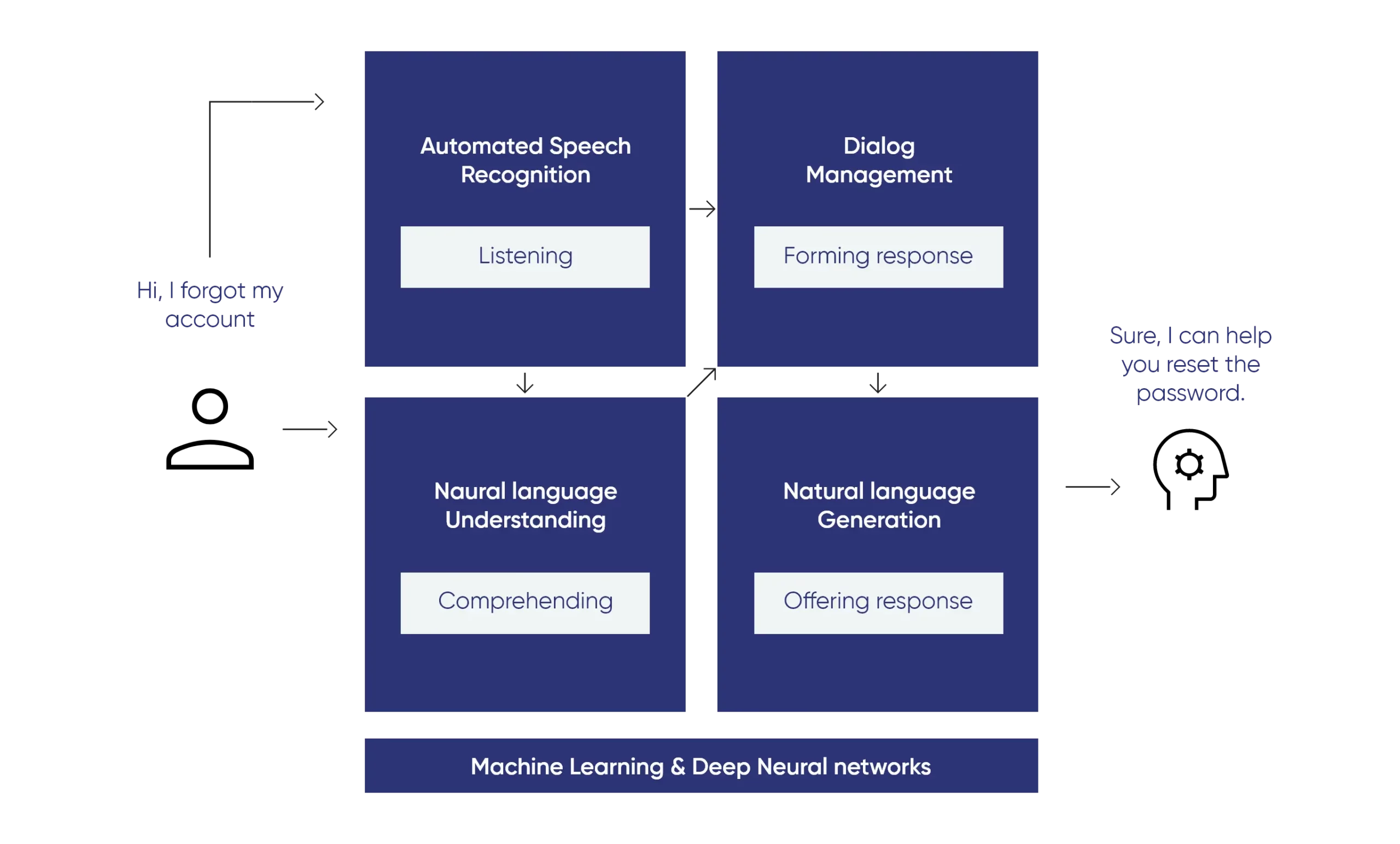

2. Customer Service

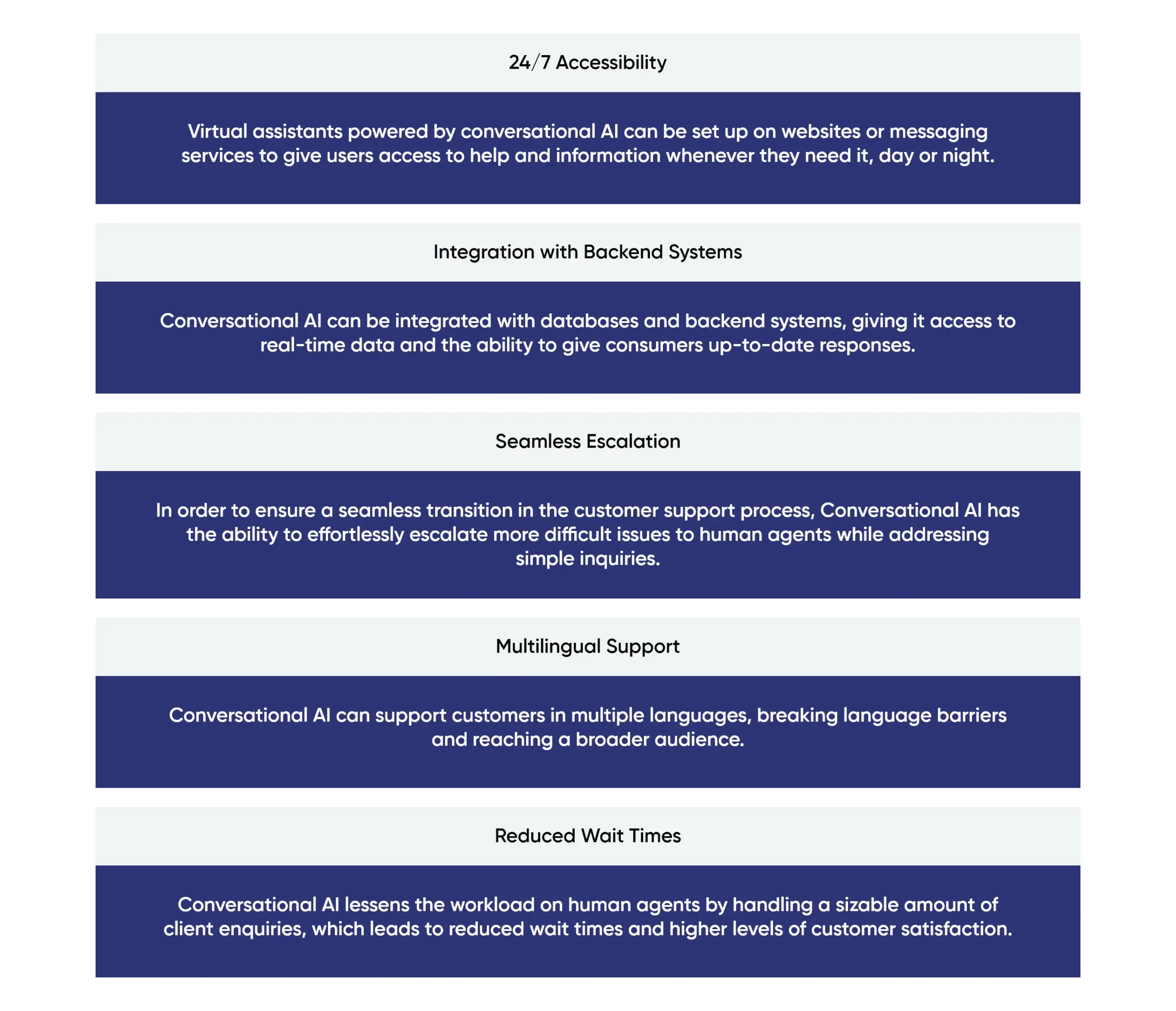

AI can enhance customer service applications through intelligent chatbots that respond quickly to customer inquiries. For example, a chatbot could learn from previous customer interactions and provide more personalized responses. As a result, businesses can improve customer satisfaction and reduce the workload on their customer service departments.

3. Fraud Detection

AI can enhance fraud detection in financial applications. Machine learning algorithms can be trained on large amounts of historical transaction data to detect patterns of fraudulent behavior. The application can then flag suspicious transactions and alert investigators, helping organizations reduce fraud-related losses.
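The flagging step can be sketched with a simple statistical rule: compare each new transaction amount with the mean and standard deviation of past amounts, and flag anything far outside the usual range. This is a deliberately simplified stand-in for a trained machine learning model. The function name, the threshold of 3 standard deviations, and the sample amounts are all assumptions made for illustration.

```python
from statistics import mean, stdev


def flag_suspicious(history, new_amounts, threshold=3.0):
    """Return the new amounts whose z-score against the history exceeds threshold."""
    mu = mean(history)
    sigma = stdev(history)  # requires at least two historical amounts
    if sigma == 0:
        # Every past amount was identical, so any different amount is unusual.
        return [amount for amount in new_amounts if amount != mu]
    return [
        amount for amount in new_amounts
        if abs(amount - mu) / sigma > threshold
    ]


# Hypothetical transaction amounts for one account.
history = [20.0, 25.0, 22.0, 30.0, 27.0, 24.0]
flagged = flag_suspicious(history, [26.0, 500.0])
print("Flagged for review:", flagged)
```

A production system would typically combine many signals (merchant, location, time of day) and learn the decision boundary from labeled fraud cases rather than relying on a single fixed threshold.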

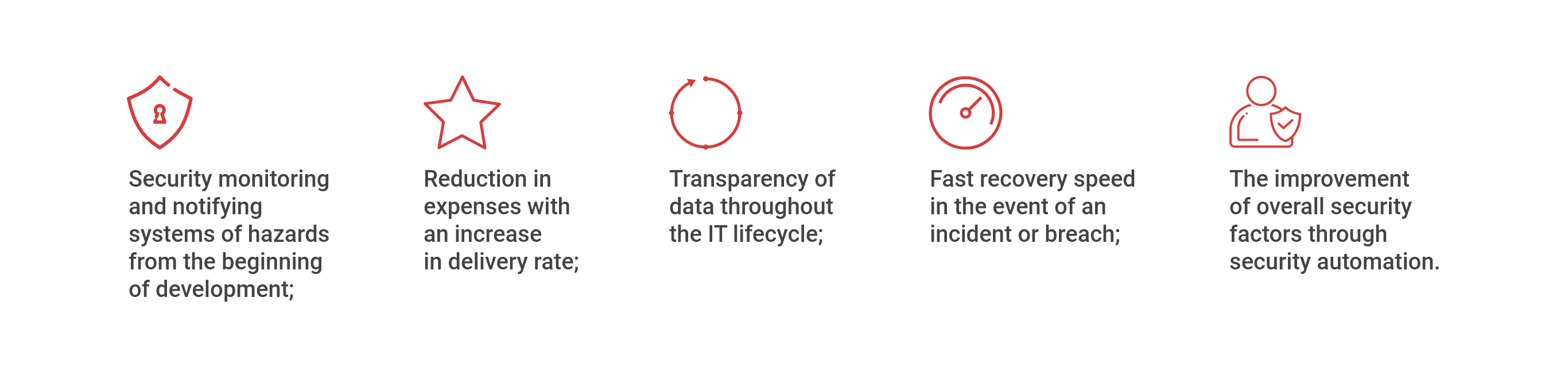

4. Cybersecurity

AI can also enhance cybersecurity by detecting and preventing attacks in real time. An AI-based cybersecurity application could detect anomalous behavior or signs of a data breach by analyzing data from various sources. The AI could block the attack and alert the security team to investigate further.
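The detect, block, and alert loop described above can be illustrated with a small rule-based monitor that counts failed logins per source in a sliding time window. This is only a sketch under stated assumptions: the class name, the thresholds, and the choice of failed logins as the signal are hypothetical, and a fixed rule stands in for the learned anomaly model an AI-based product would use.

```python
from collections import defaultdict, deque


class FailedLoginMonitor:
    """Block a source that exceeds max_failures failed logins within window_seconds."""

    def __init__(self, max_failures=3, window_seconds=60):
        self.max_failures = max_failures
        self.window_seconds = window_seconds
        self._failures = defaultdict(deque)  # source -> recent failure timestamps
        self.blocked = set()

    def record_failure(self, source, timestamp):
        """Record one failed login; return True if the source is now blocked."""
        recent = self._failures[source]
        recent.append(timestamp)
        # Discard failures that have fallen out of the sliding window.
        while recent and timestamp - recent[0] > self.window_seconds:
            recent.popleft()
        if len(recent) > self.max_failures:
            self.blocked.add(source)
        return source in self.blocked


# Hypothetical event stream: four failures from one address within 30 seconds.
monitor = FailedLoginMonitor(max_failures=3, window_seconds=60)
for t in [0, 10, 20, 30]:
    if monitor.record_failure("10.0.0.5", t):
        print(f"ALERT: blocking 10.0.0.5 at t={t}s, notify security team")
```

Here the return value doubles as the alert signal; a real deployment would send it to the security team's incident tooling.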

5. Personal Assistants

Siri, Google Assistant, and Alexa leverage AI, machine learning (ML), and natural language processing (NLP) to understand user commands, answer questions, provide recommendations, and control smart home devices, making everyday life more convenient and efficient.

6. Product Recommendations

AI can also enhance e-commerce applications. For instance, an application could use machine learning algorithms to analyze customer data and recommend products based on each customer's purchasing history, browsing behavior, or other relevant data points. Personalized product recommendations can drive sales and improve customer loyalty.
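One simple way to turn purchasing history into recommendations is to suggest items bought by other customers with overlapping purchases. The sketch below scores each candidate item by how much its buyers' baskets overlap with the target customer's. The function name, the scoring rule, and the sample data are hypothetical, and this co-occurrence approach is an illustrative stand-in for a trained recommendation model.

```python
from collections import Counter


def recommend(purchases, customer, top_n=3):
    """Recommend items owned by customers whose purchases overlap with customer's."""
    owned = purchases[customer]
    scores = Counter()
    for other, items in purchases.items():
        if other == customer:
            continue
        overlap = len(owned & items)
        if overlap == 0:
            continue  # no shared purchases, so no evidence of similar taste
        for item in items - owned:
            scores[item] += overlap
    # Sort by score (highest first), then by name so ties are deterministic.
    ranked = sorted(scores.items(), key=lambda pair: (-pair[1], pair[0]))
    return [item for item, _ in ranked[:top_n]]


# Hypothetical purchase histories, keyed by customer name.
purchases = {
    "alice": {"laptop", "mouse"},
    "bob": {"laptop", "mouse", "keyboard"},
    "carol": {"laptop", "monitor"},
    "dave": {"novel"},
}
print(recommend(purchases, "alice"))
```

Browsing behavior or other data points could be folded in by adding weighted terms to the score.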

Conclusion

Intelligent Apps can offer richer functionality, a better customer experience, and the potential for increased revenue. Realizing these benefits, however, requires understanding the technologies and methodologies relevant to Intelligent App development.

The software industry’s future lies in developing Intelligent Apps that help enterprises gain a competitive advantage in their respective industries. This guide provides a starting point for those seeking to create Intelligent Apps while highlighting the value of these technologies when appropriately integrated within an organization.
