Financial services are changing rapidly as a result of technological advances and digitization. The banking industry relies heavily on technology-driven products, and providing high-quality client service requires that these products be reliable and performant. Every operation carried out by banking software must also run without errors so that transactions remain safe and secure. This raises the need for effective software testing strategies in the financial sector.

Applications built for the banking and financial industries typically have to adhere to a strict set of standards. This follows from the legal requirements that financial institutions must meet because they are entrusted with clients’ money. All these criteria, as well as the fundamental functional requirements of banking software, should be taken into account when testing it.

Why do we need software testing in the financial sector?

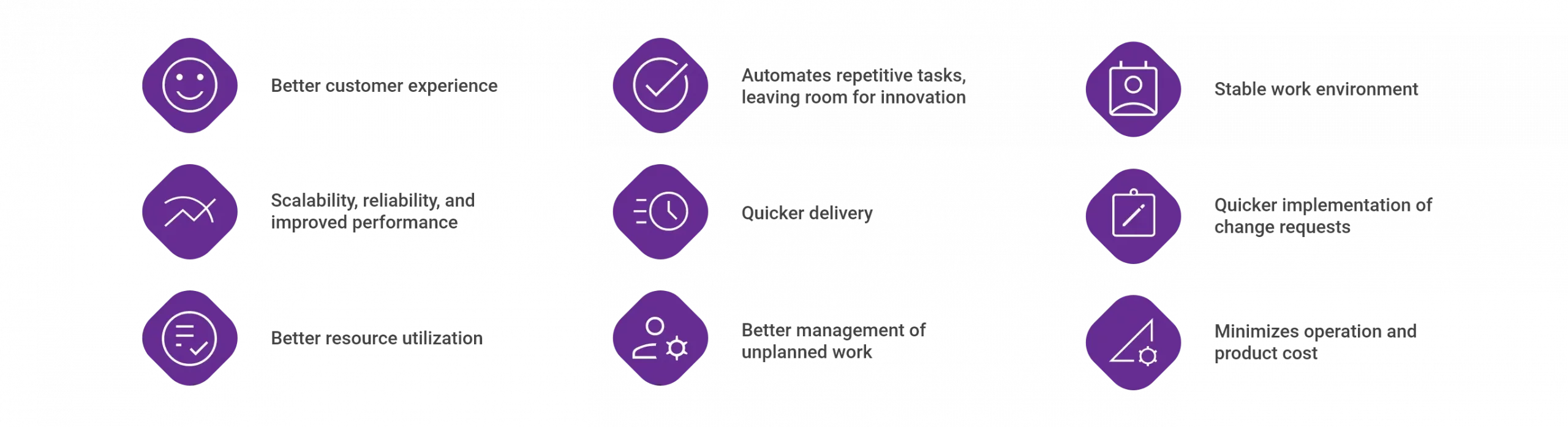

The payment process could end in disaster if there are flaws or failures at any point. If a financial application has a vulnerability, hackers may be able to access and misuse private user data. This is why financial institutions should place a high priority on end-to-end testing. It helps ensure a good user experience, customer safety, correct program functionality, acceptable load times, and data integrity. The financial sector needs software testing for several reasons:

Regulatory reporting

Financial firms frequently have to submit reports and audits to regulatory agencies in order to comply with regulations. Effective software testing ensures the required data is correct, comprehensive, and accessible for reporting needs. By implementing effective testing practices, organizations can confidently comply with regulatory reporting obligations and avoid fines or legal repercussions.

Customer satisfaction

Financial organizations depend heavily on customer trust and satisfaction. Malfunctioning software, transaction mistakes, or security breaches can all cause customer churn. Effective software testing helps find and fix problems before they affect customers, which makes a smooth and satisfying user experience possible. By providing dependable and secure software, financial institutions can preserve customer confidence and satisfaction.

Cost savings

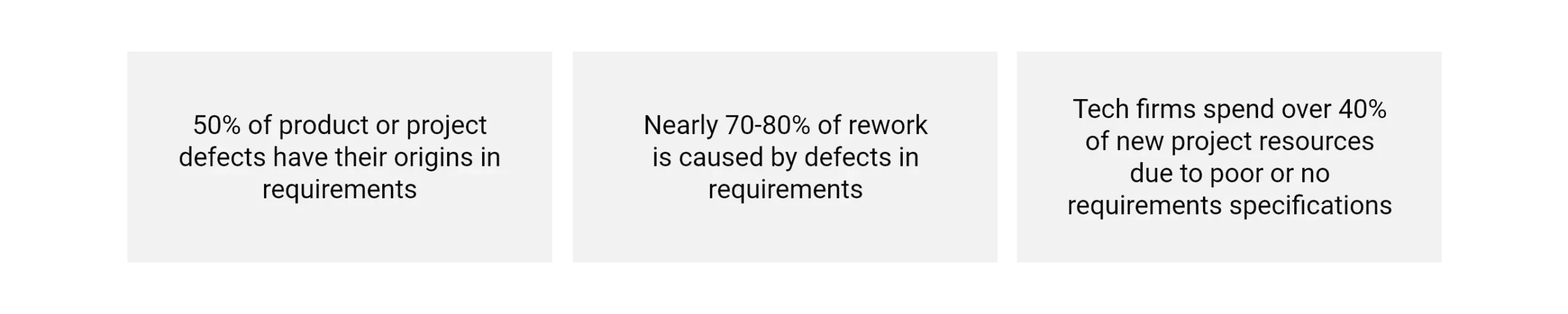

Resolving bugs early in the software development lifecycle usually costs less than fixing them after they have been discovered in production. Software testing helps identify problems early, lowering the cost of rework, system downtime, and customer support. Testing also reveals performance bottlenecks and scalability problems, which lets organizations optimize their infrastructure and resource allocation.

Risk mitigation

The financial industry is inherently exposed to risk. Software testing helps reduce that risk by verifying that the program performs complex financial computations and transactions correctly. It helps identify and resolve problems that could lead to monetary losses, reputational harm, or non-compliance with risk management procedures.
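As a minimal sketch of what verifying financial computations can look like in practice, the Python example below checks two properties: that an interest calculation rounds to cents as expected, and that a transfer never creates or destroys money. The function names (`monthly_interest`, `transfer`), the rounding rule, and the account layout are assumptions made for illustration, not part of any particular banking system.

```python
from decimal import Decimal, ROUND_HALF_EVEN

CENT = Decimal("0.01")


def monthly_interest(balance: Decimal, annual_rate: Decimal) -> Decimal:
    """Interest for one month, rounded to cents (hypothetical rounding rule)."""
    raw = balance * annual_rate / Decimal(12)
    return raw.quantize(CENT, rounding=ROUND_HALF_EVEN)


def transfer(accounts: dict, src: str, dst: str, amount: Decimal) -> None:
    """Move money between two accounts, rejecting invalid requests."""
    if amount <= 0:
        raise ValueError("amount must be positive")
    if accounts[src] < amount:
        raise ValueError("insufficient funds")
    accounts[src] -= amount
    accounts[dst] += amount


def run_checks() -> None:
    # Decimal avoids the binary floating-point errors that float would introduce.
    assert monthly_interest(Decimal("1000.00"), Decimal("0.05")) == Decimal("4.17")

    accounts = {"alice": Decimal("100.00"), "bob": Decimal("50.00")}
    total_before = sum(accounts.values())

    # A valid transfer must leave the combined balance unchanged.
    transfer(accounts, "alice", "bob", Decimal("30.00"))
    assert accounts["alice"] == Decimal("70.00")
    assert sum(accounts.values()) == total_before

    # An overdraft must be rejected and must not alter any balance.
    try:
        transfer(accounts, "alice", "bob", Decimal("500.00"))
    except ValueError:
        pass
    else:
        raise AssertionError("overdraft was not rejected")
    assert sum(accounts.values()) == total_before


if __name__ == "__main__":
    run_checks()
```

Checks like these are cheap to run on every build, so a regression in rounding or balance handling is more likely to surface before it reaches customers.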

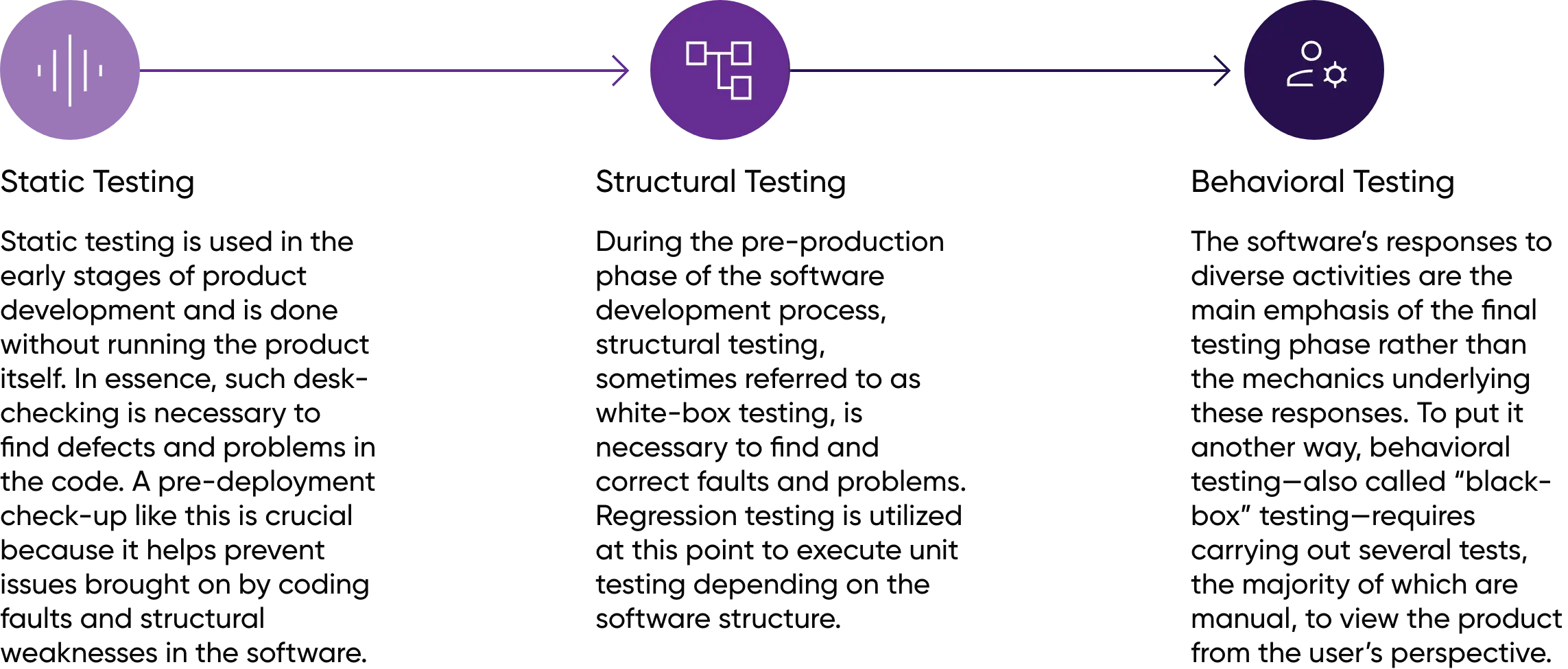

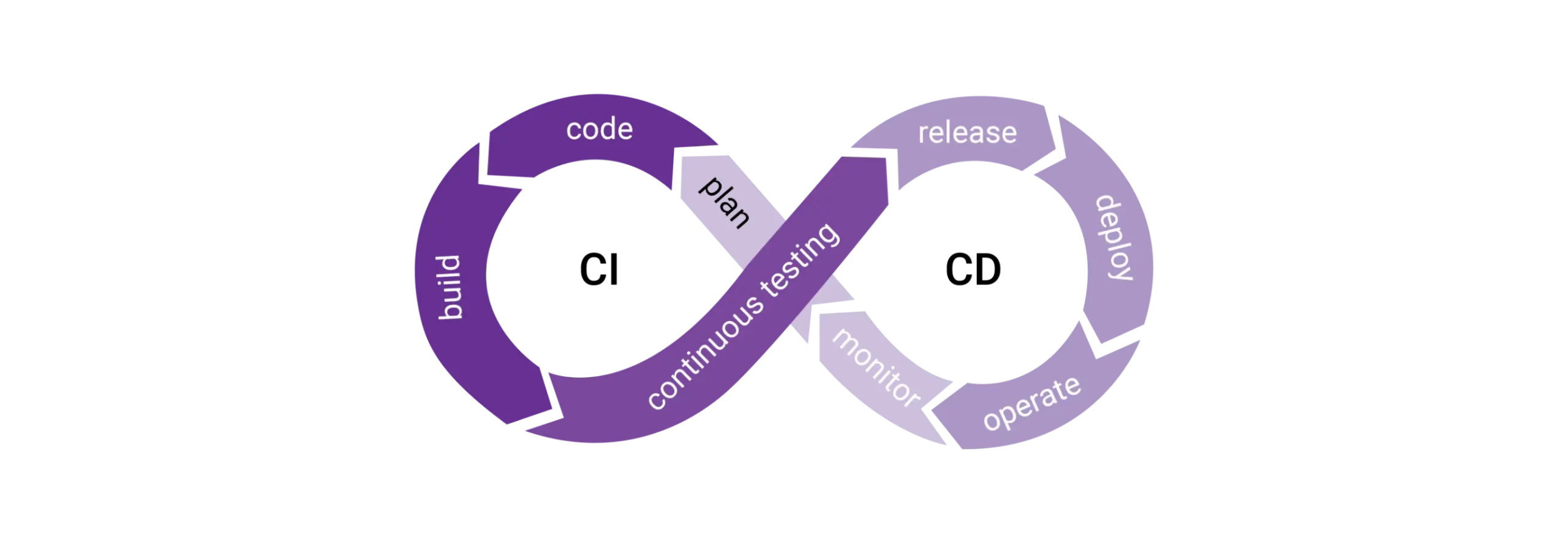

What are the stages in software testing?

When testing software, there are three main stages:

What Software Testing Strategies can be used in the financial sector?

Stress testing

Security testing

Conclusion

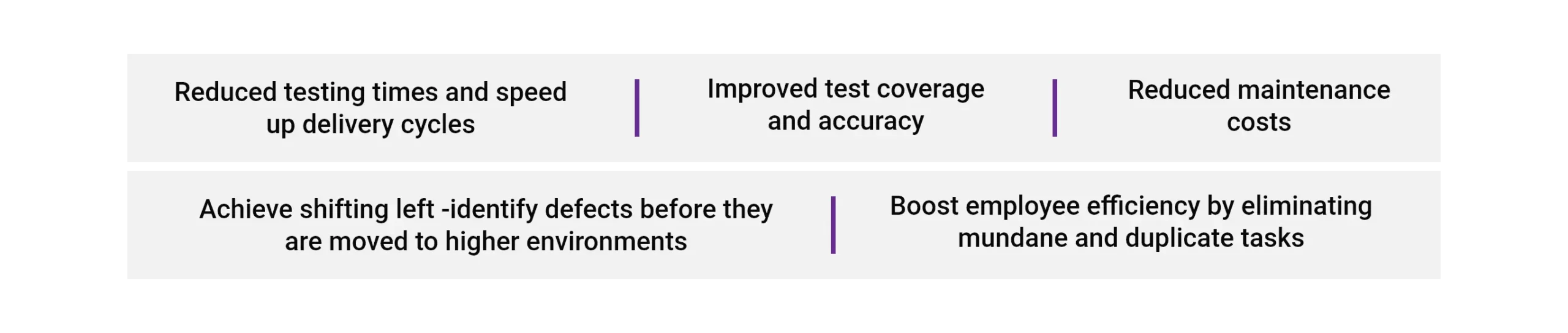

Given the sensitivity of handling clients’ financial transactions, testing banking software and procedures is of the utmost importance. It requires technical expertise and a highly skilled team. Software testing strategies such as security testing, performance testing, accessibility testing, API testing, and database testing, combined with automated testing, are essential for building reliable, high-quality applications.

Partnering with a professional software testing service provider like TVS Next can offer considerable advantages in achieving thorough test coverage and a high degree of quality assurance.

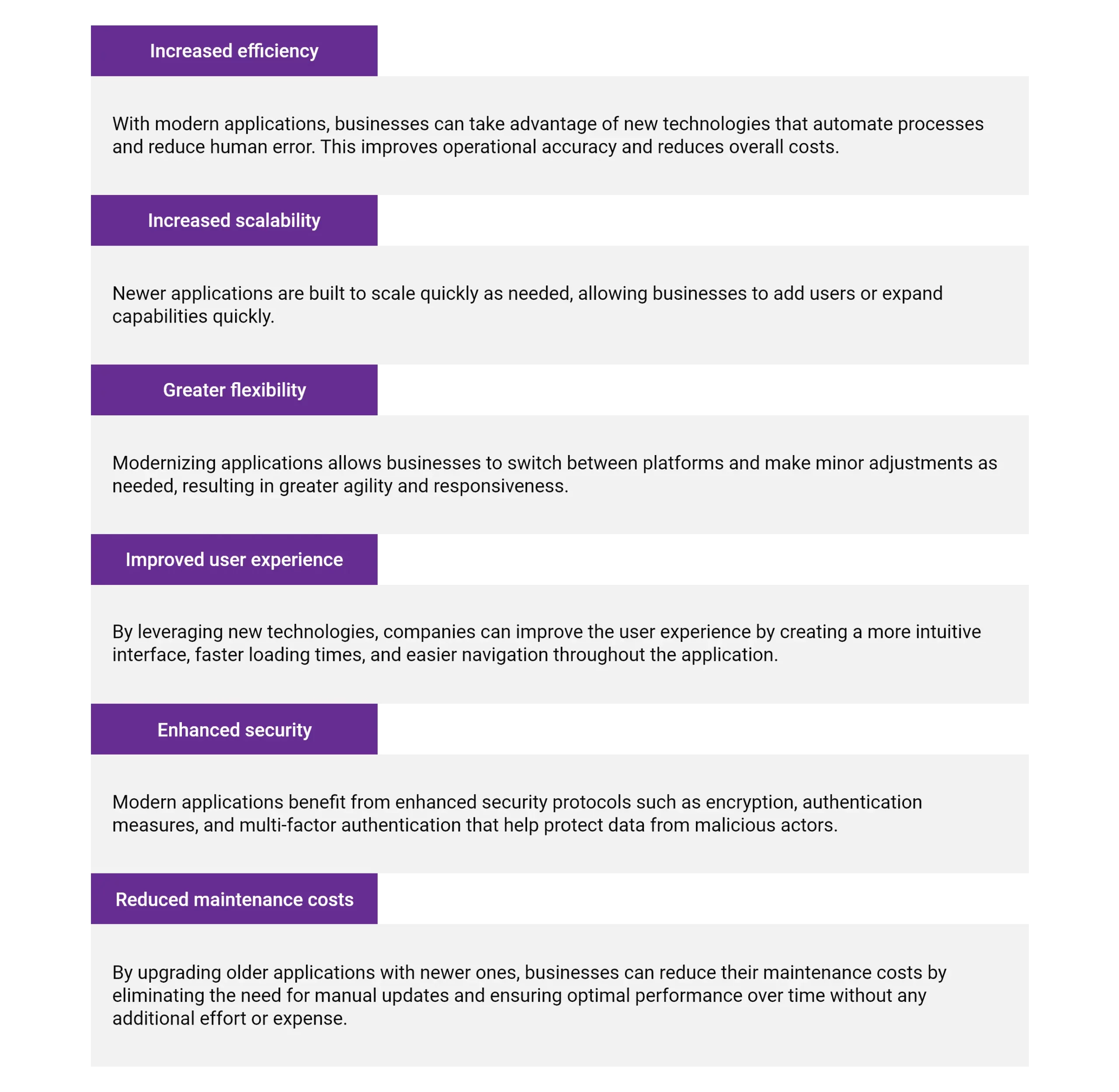

Ultimately, the best approach is to consult with experts who can offer guidance on how you can successfully modernize your applications while minimizing disruptions and downtime.

